FIREWALL: Firewall (en)

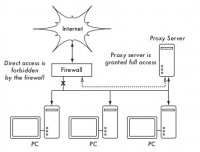

A more reliable method to ensure that a PC does not bypass a proxy is by using a firewall. The firewall can be configured to allow only the HTTP proxy server to make requests to the Internet. All other PCs are blocked, as shown in Figure 3.26.

Depending on how the firewall is configured, relying on it alone may or may not be sufficient. If it only blocks access from the campus LAN to port 80 on web servers, savvy users will find ways around it. They will also still be able to use other bandwidth-hungry protocols such as BitTorrent or Kazaa.
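As a rough illustration of the stricter policy described above, the following sketch assumes a Linux-based firewall using iptables; this is an assumption, since the text does not name a firewall product. The proxy address 192.168.1.10 and the interface name eth1 are hypothetical. It drops all forwarded traffic by default and lets only the proxy open new connections, so other protocols are blocked along with direct web access. By default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: assumes a Linux firewall with iptables between the LAN and the Internet.
# PROXY_IP and LAN_IF are hypothetical values; adjust them to the local network.
PROXY_IP="${PROXY_IP:-192.168.1.10}"
LAN_IF="${LAN_IF:-eth1}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands, 0 = apply them (needs root)

ipt() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "iptables $*"
    else
        iptables "$@"
    fi
}

allow_only_proxy() {
    # Drop every forwarded packet that no rule below accepts.
    ipt -P FORWARD DROP
    # Let replies to already established connections pass.
    ipt -A FORWARD -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
    # Only the proxy server may open new connections to the Internet.
    ipt -A FORWARD -i "$LAN_IF" -s "$PROXY_IP" -j ACCEPT
}

allow_only_proxy
```

Because the default policy is to drop, this blocks all ports for ordinary PCs, not just port 80, which addresses the circumvention problem mentioned above.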

Dual Network Cards

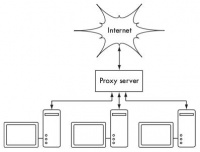

Perhaps the most reliable method is to install two network cards in the proxy server and place it between the campus LAN and the Internet, as shown in the diagram. This network layout makes it physically impossible to reach the Internet without going through the proxy server.

The proxy server in this diagram should not enable IP forwarding, unless the administrator knows exactly what they want to allow through.

A major advantage of this design is that it allows a technique known as transparent proxying. With a transparent proxy, users' web requests are automatically forwarded to the proxy server, so users do not need to configure their web browsers to use it. This effectively forces all web traffic through the cache, eliminates many possible user errors, and even works with devices that do not support manual proxy settings. For more detailed information on configuring a transparent proxy with Squid, see the Squid documentation.
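To make the idea more concrete, here is a minimal sketch of the redirection step, assuming the proxy is a Linux machine with iptables and that Squid listens on port 3128. The interface name eth0 is hypothetical. Squid itself must also be set up for interception (its http_port directive takes a transparent or intercept option, depending on the version), which is not shown here. By default the script only prints the command.

```shell
#!/bin/sh
# Sketch only: assumes the proxy is a Linux box running Squid on port 3128,
# with LAN_IF (hypothetical name) facing the campus LAN.
LAN_IF="${LAN_IF:-eth0}"
SQUID_PORT="${SQUID_PORT:-3128}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command, 0 = apply it (needs root)

redirect_web_to_squid() {
    # Rewrite the destination of LAN web requests (TCP port 80)
    # so that they arrive at the local Squid port instead.
    set -- -t nat -A PREROUTING -i "$LAN_IF" -p tcp --dport 80 \
        -j REDIRECT --to-ports "$SQUID_PORT"
    if [ "$DRY_RUN" = "1" ]; then
        echo "iptables $*"
    else
        iptables "$@"
    fi
}

redirect_web_to_squid
```

Only plain HTTP on port 80 is redirected in this sketch; other traffic still has no route to the Internet as long as IP forwarding stays disabled.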

Policy-Based Routing

One way to prevent users from bypassing the proxy with Cisco equipment is policy-based routing. The Cisco router transparently directs web requests to the proxy server. This technique is used at Makerere University. The advantage of this method is that, if the proxy server goes down, the routing policy can be temporarily removed, allowing clients to connect directly to the Internet.

Full Site Mirroring

With permission from the owner or webmaster of a site, an entire site can be mirrored to a local server overnight, if it is not too large. This might need to be considered for websites important to an organization or very popular among web users. This may have some utility, but it also has potential dangers. For example, if the mirrored site contains CGI scripts or interactive dynamic content requiring user input, this could cause issues. One example is a site requiring people to register online for a conference. If someone registers on the mirror server (and the mirrored script is operational), then the original site's operator will not have information about those who registered.

Since mirroring a site could infringe copyright, this technique should only be used with permission from the site concerned. If the site runs rsync, it can be mirrored using rsync. This is likely the fastest and most efficient way to keep the mirrored content synchronized. If the remote web server does not run rsync, the recommended software is the wget program. It is part of most versions of Unix / Linux. A Windows version can be found at http://xoomer.virgilio.it/hherold/, or in the free Cygwin Unix tools package (http://www.cygwin.com/).

A script can be set to run every night on a local web server and perform the following:

Change directory to the web server's document root: for example, /var/www/ on Unix, or C:\Inetpub\wwwroot on Windows.

Mirror the web site using the command:

wget --cache=off -m http://www.python.org

The mirrored web site will be in the www.python.org directory. The web server should now be configured to serve the contents of this directory as a name-based virtual host. Set up the local DNS server to fake an entry for this site. For this to work, client PCs must be configured to use the local DNS server as their primary DNS. (This is advisable in any case, as a local caching DNS server speeds up web response times.)
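The two steps above could be wrapped in a small script along the following lines. This is only a sketch: the document root and URL are the example values from the text, and the WGET variable is a hypothetical hook that lets the command be previewed (for instance by setting it to echo) before anything is downloaded.

```shell
#!/bin/sh
# Sketch of the nightly mirror job described above.
# DOCROOT and SITE_URL use the example values from the text; WGET can be
# overridden (for instance with "echo") to preview the command.
DOCROOT="${DOCROOT:-/var/www}"
SITE_URL="${SITE_URL:-http://www.python.org}"
WGET="${WGET:-wget}"

mirror_site() {
    # Step 1: change to the web server's document root.
    cd "$DOCROOT" || return 1
    # Step 2: mirror the site; the copy lands in a directory named after the host.
    "$WGET" --cache=off -m "$SITE_URL"
}

# Do the work only when asked explicitly, e.g. "mirror-site.sh run".
if [ "${1:-}" = "run" ]; then
    mirror_site
fi
```

Saved under a hypothetical path such as /usr/local/bin/mirror-site.sh, the script could be scheduled with a crontab entry like `0 2 * * * /usr/local/bin/mirror-site.sh run` to run at 02:00 every night.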

Pre-populate cache using wget

Rather than setting up a web mirror as described in the previous section, a better approach might be to pre-populate the proxy cache using an automated process. This method has been described by J.J. Eksteen and J.P.L. Cloete from CSIR in Pretoria, South Africa, in a paper titled "Enhancing International World Wide Web Access in Mozambique Through the Use of Mirroring and Caching Proxies". In this paper (available at http://www.isoc.org/inet97/ans97/cloet.htm) they explain how the process works:

"An automated process retrieves the site's homepage and a specified number of additional pages (recursively following HTML links on the retrieved pages) using a proxy. Rather than writing the retrieved pages to a local disk, the mirror process discards the retrieved pages. This is done in order to save system resources and to avoid potential copyright conflicts. By using a proxy as an intermediary, the pages retrieved are guaranteed to be cached by the proxy as if a client were accessing the pages. When a client accesses the retrieved pages, they are served from the cache and not through the congested international connection. This process can be run during off-peak times to maximize bandwidth utilization and not to compete with other access activities."

The following command (scheduled to run nightly or once a week) is all that is needed; repeat it for every site that requires pre-population:

wget --proxy=on --cache=off --delete-after -m http://www.python.org

These options do the following:

- -m: Mirrors the entire site. wget starts at www.python.org and follows all hyperlinks, so it downloads all subpages.

- --proxy=on: Ensures that wget uses the proxy server. This can be omitted if a transparent proxy is used.

- --cache=off: Ensures that fresh content is retrieved from the Internet, not from the local proxy server.

- --delete-after: Deletes the mirrored copy. The mirrored content remains in the proxy cache if there is enough disk space and the proxy server's caching parameters are set correctly.

In addition, wget has many other options; for example, to supply a password for websites that require one. When using this tool, Squid should be configured with enough disk space to hold all the pre-populated sites and more (for normal Squid usage involving pages other than the pre-populated ones). Fortunately, hard disks are becoming ever cheaper and larger. However, this technique can only be used with a few selected sites. These sites must be small enough for the process to finish before the working day begins, and the remaining disk space should be watched carefully.
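Since the command has to be repeated for each site, a loop is a natural fit. The sketch below assumes the proxy address is passed to wget through the http_proxy environment variable; the proxy URL and the SITES list are hypothetical, and WGET is again a hook for previewing the commands.

```shell
#!/bin/sh
# Sketch of a cache pre-population job. PROXY_URL and the SITES list are
# hypothetical; WGET can be overridden (e.g. with "echo") for a dry run.
PROXY_URL="${PROXY_URL:-http://proxy.example.edu:3128}"
SITES="${SITES:-http://www.python.org}"
WGET="${WGET:-wget}"

prepopulate_cache() {
    for url in $SITES; do
        # Fetch through the proxy, ask for fresh copies, and discard the
        # downloaded files; the proxy keeps its own copy of each object.
        http_proxy="$PROXY_URL" "$WGET" --proxy=on --cache=off --delete-after -m "$url" \
            || echo "pre-population failed for $url" >&2
    done
}

# Do the work only when asked explicitly, e.g. "prepopulate.sh run".
if [ "${1:-}" = "run" ]; then
    prepopulate_cache
fi
```

A failure on one site is reported and the loop moves on, so a single unreachable server does not stop the remaining sites from being fetched during the off-peak window.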

Cache Hierarchy

When an organization has more than one proxy server, the proxies can share cached information among themselves. For example, if a web page exists in server A's cache but not in server B's, a user connected through server B can get the cached object from server A via server B. The Internet Cache Protocol (ICP) and the Cache Array Routing Protocol (CARP) can both be used to share cache information. CARP is considered the better protocol. Squid supports both protocols, and MS ISA Server supports CARP. For more information, see http://squid-docs.sourceforge.net/latest/html/c2075.html. This sharing of cached information reduces bandwidth usage in organizations where more than one proxy is used.
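For Squid, sharing over ICP is set up with cache_peer lines in squid.conf. The sketch below simply prints such lines for a list of sibling proxies; the host names are hypothetical, and 3128 and 3130 are Squid's default HTTP and ICP ports. It covers only the ICP case, not CARP.

```shell
#!/bin/sh
# Sketch: print squid.conf lines that make other campus proxies ICP siblings.
# The peer host names are hypothetical; 3128 and 3130 are Squid's default
# HTTP and ICP ports.
PEERS="${PEERS:-proxy-a.example.edu proxy-b.example.edu}"

print_sibling_peers() {
    for peer in $PEERS; do
        # Format: cache_peer <host> <type> <http-port> <icp-port>
        echo "cache_peer $peer sibling 3128 3130"
    done
}

print_sibling_peers
```

Each proxy would list the other proxies, not itself, as siblings, so the generated lines differ from one server to the next.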

Proxy Specifications

On a university campus network, there should be more than one proxy server, both for performance and for redundancy. With today's cheaper and larger hard disks, powerful proxy servers can be built with 50 GB or more of disk space allocated to the cache. Disk performance is crucial, so fast SCSI disks perform best (although an IDE-based cache is better than none at all). RAID or mirroring is not recommended. It is also advisable to dedicate a separate hard disk to the cache: for example, one disk for the cache and a second disk for the operating system and cache logging. Squid is designed to use as much RAM as it can get, because data retrieved from RAM arrives much faster than data read from a hard disk. For a campus network, 1 GB of RAM or more is needed:

- Besides the memory needed for the operating system and other applications, Squid requires 10 MB of RAM for every 1 GB of disk cache. Therefore, if there are 50 GB allocated for disk caching space, Squid will need an additional 500 MB of memory.

- The machine also needs 128 MB for Linux and 128 MB for X Windows.

- Another 256 MB should be added for other applications and to ensure everything runs smoothly.

Installing a large amount of memory increases the machine's performance significantly, because it reduces the need to use the hard disk. Memory is thousands of times faster than a hard disk. Modern operating systems keep frequently accessed data in memory if enough RAM is available, but they fall back on a page file as additional memory when RAM runs short.
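The memory estimate from the list above can be worked through with a few lines of shell arithmetic. This is only a sketch using the figures given in the text; the function name is made up for illustration.

```shell
#!/bin/sh
# Sketch of the memory estimate above; all figures are in MB and come from the text.
proxy_ram_mb() {
    disk_cache_gb="$1"
    squid_index=$((disk_cache_gb * 10))   # 10 MB of RAM per 1 GB of disk cache
    os=128                                # Linux
    xwindows=128                          # X Windows
    other=256                             # other applications and headroom
    echo $((squid_index + os + xwindows + other))
}

proxy_ram_mb 50   # prints 1012
```

For a 50 GB disk cache the total comes to 1012 MB, which is where the figure of roughly 1 GB of RAM for a campus proxy comes from.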
