Difference between revisions of "Orange: Neural Network"


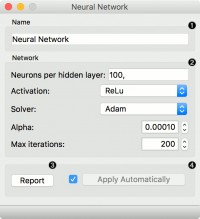

[[File:NeuralNetwork-stamped.png|center|200px|thumb]]

Revision as of 10:26, 6 April 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/model/neuralnetwork.html

The Neural Network widget is a multi-layer perceptron (MLP) algorithm with backpropagation.

Input

- Data: input dataset

- Preprocessor: preprocessing method(s)

Output

- Learner: multi-layer perceptron learning algorithm

- Model: trained model

The Neural Network widget uses sklearn's Multi-layer Perceptron algorithm, which can learn non-linear as well as linear models. We can set the activation function (default ReLu), the number of neurons per hidden layer, the solver (default Adam), and so on.

- A name under which it will appear in other widgets. The default name is “Neural Network”.

- Set model parameters:

- Neurons per hidden layer: a list in which the ith element gives the number of neurons in the ith hidden layer. E.g. a neural network with 3 hidden layers can be defined as 2, 3, 2.

- Activation function for the hidden layer:

- Identity: no-op activation, useful to implement linear bottleneck

- Logistic: the logistic sigmoid function

- tanh: the hyperbolic tangent function

- ReLu: the rectified linear unit function

- Solver for weight optimization:

- L-BFGS-B: an optimizer in the family of quasi-Newton methods

- SGD: stochastic gradient descent

- Adam: stochastic gradient-based optimizer

- Alpha: L2 penalty (regularization term) parameter

- Max iterations: maximum number of iterations

- Other parameters are set to sklearn’s defaults.

- Produce a report.

- When the box is ticked (Apply Automatically), the widget will communicate changes automatically. Alternatively, click Apply.
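The settings listed above correspond roughly to constructor arguments of scikit-learn's `MLPClassifier`. The following is a minimal sketch of that correspondence, not the widget's actual code: the mapping of widget labels to argument names is an assumption, the `alpha` and `max_iter` values shown are scikit-learn's defaults rather than values confirmed for the widget, and `random_state=0` is added only to make the run repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.neural_network import MLPClassifier

# Assumed mapping from the widget's settings to scikit-learn arguments.
mlp = MLPClassifier(
    hidden_layer_sizes=(2, 3, 2),  # "Neurons per hidden layer": 2, 3, 2
    activation="relu",             # "identity", "logistic", "tanh" or "relu"
    solver="adam",                 # "lbfgs", "sgd" or "adam"
    alpha=0.0001,                  # L2 penalty (scikit-learn default)
    max_iter=200,                  # maximum number of iterations (scikit-learn default)
    random_state=0,                # illustration only: repeatable weights
)

X, y = load_iris(return_X_y=True)
mlp.fit(X, y)  # may emit a ConvergenceWarning with such a small network

# One weight matrix per connection between consecutive layers:
# 4 inputs -> 2 -> 3 -> 2 -> 3 output classes.
layer_shapes = [w.shape for w in mlp.coefs_]
print(layer_shapes)
```

Three hidden layers give four weight matrices, because the input and output layers are added automatically from the data.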

Examples

The following example is a classification task on the iris dataset. We compare the results of the Neural Network widget with those of the Logistic Regression widget.
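Outside the Orange canvas, a comparable experiment can be sketched directly in scikit-learn. This is an illustrative approximation rather than the workflow itself: the 5-fold cross-validation, the `StandardScaler` step, `max_iter=1000` and `random_state=0` are all assumptions chosen for this sketch.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Both learners get the same scaling step so the comparison is like for like.
models = {
    "Neural Network": make_pipeline(
        StandardScaler(), MLPClassifier(max_iter=1000, random_state=0)
    ),
    "Logistic Regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
}

# Mean accuracy over 5 cross-validation folds for each learner.
scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in models.items()
}
for name, accuracy in scores.items():
    print(f"{name}: mean accuracy {accuracy:.3f}")
```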

The following example is a prediction task, using the iris data. This workflow shows how to use the Learner output. We feed the Neural Network prediction model into the Predictions widget and observe the predicted values.
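The same idea, training a model and then inspecting its predictions, can be sketched in scikit-learn as follows. The 80/20 stratified split, `max_iter=1000` and `random_state=0` are assumptions made for this illustration; they are not taken from the workflow.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, stratify=iris.target, random_state=0
)

# Train the network, then predict classes and class probabilities
# for rows the model has not seen.
model = MLPClassifier(max_iter=1000, random_state=0).fit(X_train, y_train)
predicted = model.predict(X_test)
probabilities = model.predict_proba(X_test)

# Show the first few true labels next to the predicted ones.
for true, pred in list(zip(y_test, predicted))[:5]:
    print(iris.target_names[true], "->", iris.target_names[pred])
```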

Other example workflows: