Orange: Word Cloud from Text Files

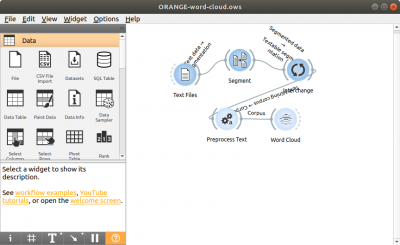

A word cloud can be built from plain text (ASCII) files, as in the workflow below. First, the data from the Text Files widget must be segmented into words with the Segment widget. The segmented output is then converted into a corpus with the Interchange widget so that the text mining toolbox can process it. Before displaying the word cloud, it is a good idea to preprocess the text with the Preprocess Text widget to remove unneeded words such as conjunctions.

The workflow in Orange Data Mining shown in the image follows a text processing and visualization approach using a word cloud. Here’s the step-by-step breakdown:

1. Text Files (Loading Data)

- The Text Files widget is used to load text data from multiple files.

- These files contain textual information that will be analyzed.

2. Segment (Splitting Text into Segments)

- The Segment widget splits the text data into segments. In this workflow the segments are words, although sentences, paragraphs, or predefined sections are also possible.

- This step helps in structuring the data for further processing.

3. Preprocess Text (Cleaning and Normalization)

- The Preprocess Text widget processes the segmented text.

- Common preprocessing steps include:

- Tokenization (splitting text into words),

- Removing stopwords (common words like "the", "is", etc.),

- Stemming or lemmatization (reducing words to their base form).

- This prepares the text data for analysis.

4. Word Cloud (Visualizing Key Words)

- The Word Cloud widget generates a word cloud visualization.

- The most frequently occurring words appear larger, helping in identifying key terms and patterns in the dataset.

Summary

This Orange Data Mining workflow loads text files, segments the data, preprocesses the text to remove noise such as stopwords, and visualizes the most frequent words using a word cloud. It is useful for text mining, exploratory text analysis, and keyword extraction.
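To make the data flow concrete, the same four steps can be approximated in plain Python. This is only a sketch and not what Orange runs internally: the function names, the tiny English stopword list, and the two throwaway files are assumptions made up for the illustration.

```python
import re
import tempfile
from collections import Counter
from pathlib import Path

# Hypothetical, tiny stopword list for illustration only.
STOPWORDS = {"the", "is", "a", "of", "and"}

def load_text_files(folder):
    """Step 1: read every .txt file in a folder (the role of Text Files)."""
    return [p.read_text(encoding="utf-8") for p in sorted(Path(folder).glob("*.txt"))]

def segment_and_preprocess(text):
    """Steps 2-3: lowercase, split into word segments, drop stopwords."""
    words = re.findall(r"\w+", text.lower())
    return [w for w in words if w not in STOPWORDS]

def word_frequencies(documents):
    """Step 4 input: the counts a word cloud uses to size each word."""
    counts = Counter()
    for doc in documents:
        counts.update(segment_and_preprocess(doc))
    return counts

# Usage with two throwaway files in a temporary folder.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "a.txt").write_text("The cat is a friend of the dog", encoding="utf-8")
    Path(folder, "b.txt").write_text("The dog and the cat", encoding="utf-8")
    frequencies = word_frequencies(load_text_files(folder))

print(frequencies.most_common(3))
```

The resulting counter plays the role of the data handed to the Word Cloud widget: the higher the count, the larger the word.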

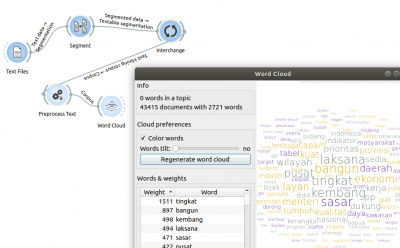

The Orange Data Mining workflow in the image follows a text mining approach, processing large amounts of textual data and visualizing it through a Word Cloud. Here’s the breakdown:

Workflow Steps:

1. Text Files (Loading Data)

- The Text Files widget loads text data from multiple documents.

- The dataset contains 43,415 documents with 2,721 unique words.

2. Segment (Splitting Text into Meaningful Parts)

- The Segment widget splits the text into segments. Here the segments are words, although sentences or paragraphs are also possible.

- This segmentation helps in structuring the text for further analysis.

3. Interchange (Managing Data Flow)

- The Interchange widget converts the segmented data into a corpus so that the text mining widgets can process it.

- It ensures that segmented data is correctly passed to subsequent processing steps.

4. Preprocess Text (Cleaning and Normalization)

- The Preprocess Text widget processes the text by:

- Tokenizing (splitting text into words),

- Removing stopwords (common, less meaningful words),

- Stemming or Lemmatization (reducing words to their root forms).

- This step improves the clarity of data before visualization.

5. Word Cloud (Visualizing Important Words)

- The Word Cloud widget generates a visual representation of word frequency.

- More frequently occurring words appear larger, while less common words appear smaller.

Word Cloud Output in the Image:

- The Word Cloud visualizes frequently used words from the dataset.

- The top words in the dataset (along with their frequency) include:

- "tingkat" (1511 occurrences)

- "bangun" (897 occurrences)

- "kembang" (898 occurrences)

- "laksana" (494 occurrences)

- "sasar" (471 occurrences)

- The cloud is color-coded to improve readability.
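As a rough illustration of how frequency translates into visual weight, the counts listed above can be mapped to font sizes. The linear scaling and the 12 to 48 pt range are assumptions chosen for this sketch; Orange's Word Cloud widget may use a different scaling.

```python
# Counts reported for the word cloud above.
counts = {"tingkat": 1511, "bangun": 897, "kembang": 898, "laksana": 494, "sasar": 471}

def font_sizes(counts, min_size=12, max_size=48):
    """Map each count linearly onto [min_size, max_size] (assumed scaling)."""
    low, high = min(counts.values()), max(counts.values())
    span = (high - low) or 1  # avoid division by zero when all counts are equal
    return {
        word: round(min_size + (count - low) / span * (max_size - min_size), 1)
        for word, count in counts.items()
    }

sizes = font_sizes(counts)
for word, size in sorted(sizes.items(), key=lambda item: -item[1]):
    print(f"{word:10s} {counts[word]:5d} occurrences -> {size} pt")
```

With this scaling, "tingkat" gets the largest size and "sasar" the smallest, while "bangun" and "kembang" end up almost identical because their counts differ by one.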

Summary:

This Orange Data Mining workflow loads text documents, segments them, preprocesses the text, and generates a word cloud. The word cloud output provides insights into the most frequently used words, making it useful for text mining, keyword extraction, and trend analysis.

Workflow in Orange Data Mining (Text Processing and Word Cloud Analysis)

This Orange Data Mining workflow follows a text processing and visualization pipeline, mainly focused on preprocessing textual data and analyzing frequent words using a Word Cloud. Here’s the breakdown:

1. Workflow Steps:

1. Text Files (Loading Data)

- The Text Files widget loads a dataset containing text documents.

- The dataset consists of 43,415 documents and 2,721 unique word types.

2. Segment (Splitting Text into Meaningful Parts)

- The Segment widget splits the text data into smaller components. Here the components are words, although sentences, paragraphs, or predefined segments are also possible.

3. Interchange (Managing Data Flow)

- The Interchange widget converts the segmented text into a corpus so that it can be passed to the text mining widgets that follow.

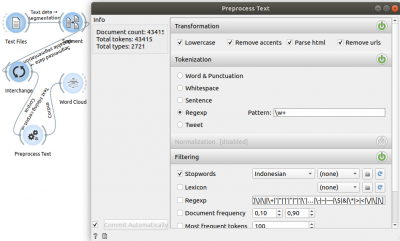

4. Preprocess Text (Cleaning and Normalization)

- The Preprocess Text widget applies various text processing techniques to clean and filter the data.

5. Word Cloud (Visualization)

- The Word Cloud widget generates a visual representation of word frequencies.

- More frequently occurring words appear larger in the cloud.

2. Detailed Parameters in "Preprocess Text" Widget

The Preprocess Text widget performs several key transformations and filtering steps:

A. Transformation (Text Cleaning)

- Lowercase: Converts all text to lowercase.

- Remove accents: Removes diacritical marks from words.

- Parse HTML: Extracts meaningful text from HTML.

- Remove URLs: Eliminates any hyperlinks from the text.
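The four transformations can be imitated with the Python standard library, which may help when checking what each option does to a sample string. This is a sketch under assumptions: the helper names are hypothetical, the URL pattern is deliberately simple, and Orange's own implementation may differ in detail.

```python
import re
import unicodedata
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects only the text nodes of an HTML fragment."""
    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)

def remove_accents(text):
    # Decompose characters, then drop the combining marks (the accents).
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

def strip_html(text):
    parser = TextExtractor()
    parser.feed(text)
    parser.close()
    return "".join(parser.parts)

def remove_urls(text):
    # Simplified pattern: anything starting with http://, https://, or www.
    return re.sub(r"(https?://|www\.)\S+", "", text)

def transform(text):
    # Same order as the options listed above.
    text = text.lower()
    text = remove_accents(text)
    text = strip_html(text)
    return remove_urls(text)

cleaned = transform("<p>Café MODERN di https://example.com/menu</p>")
print(repr(cleaned))
```

The sample string loses its tags, its accent, and its URL, leaving only lowercase words for the tokenizer.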

B. Tokenization (Splitting Text into Words)

- Regexp-based tokenization is selected with the pattern `\w+`.

- This pattern extracts runs of word characters (letters, digits, and underscores), so punctuation and whitespace are excluded.
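The effect of the `\w+` pattern is easy to check with Python's `re` module, which uses the same notation. The sample sentence is invented for this illustration.

```python
import re

text = "Pembangunan desa, tahun 2023: meningkat 5% (data_bps)."

# \w+ matches runs of letters, digits, and underscores; everything else
# (spaces, commas, colons, percent signs, brackets) acts as a separator.
tokens = re.findall(r"\w+", text)
print(tokens)
# ['Pembangunan', 'desa', 'tahun', '2023', 'meningkat', '5', 'data_bps']
```

Note that the underscore in `data_bps` is kept, because `\w` treats it as a word character, and that numbers such as `2023` survive as tokens.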

C. Filtering (Removing Unwanted Words)

- Stopwords Removal: Uses the Indonesian stopword list to remove common words like "dan," "di," "ke," etc.

- Regexp Filtering: Applies a regular expression filter to exclude certain symbols and non-word characters.

- Document Frequency Filtering:

- Minimum document frequency: 0.10 (words that appear in fewer than 10% of the documents are removed).

- Maximum document frequency: 0.90 (Words appearing in more than 90% of documents are removed).

- Most Frequent Tokens: The top 100 words are retained for analysis.
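The filtering stage can be sketched in plain Python to show how the thresholds interact. Several things here are assumptions for illustration: the function name, the short stopword excerpt, the order in which the filters run, and whether the thresholds are inclusive. The demo call uses a minimum of 0.30 rather than 0.10 only because the toy corpus has just four documents.

```python
from collections import Counter

# Hypothetical excerpt of an Indonesian stopword list.
STOPWORDS = {"dan", "di", "ke", "dari", "yang"}

def filter_tokens(docs, min_df=0.10, max_df=0.90, top_n=100):
    """docs is a list of token lists; returns the filtered token lists."""
    # 1. Stopword removal.
    docs = [[t for t in doc if t not in STOPWORDS] for doc in docs]

    # 2. Document frequency: share of documents that contain each token.
    doc_freq = Counter(token for doc in docs for token in set(doc))
    n_docs = len(docs)
    kept = {t for t, df in doc_freq.items() if min_df <= df / n_docs <= max_df}
    docs = [[t for t in doc if t in kept] for doc in docs]

    # 3. Keep only the top_n most frequent tokens overall.
    totals = Counter(token for doc in docs for token in doc)
    top = {t for t, _ in totals.most_common(top_n)}
    return [[t for t in doc if t in top] for doc in docs]

docs = [
    ["tingkat", "dan", "bangun", "desa"],
    ["tingkat", "di", "kembang", "desa"],
    ["tingkat", "bangun", "sasar", "desa"],
    ["tingkat", "kembang", "laksana", "desa"],
]
filtered = filter_tokens(docs, min_df=0.30, max_df=0.90, top_n=100)
print(filtered)
```

In this toy corpus "tingkat" and "desa" occur in every document and exceed the maximum, "sasar" and "laksana" occur in only a quarter of the documents and fall below the minimum, so only "bangun" and "kembang" remain.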

Summary

This Orange Data Mining workflow processes a large textual dataset (43,415 documents), cleans the text using preprocessing techniques, and generates a Word Cloud visualization. Key preprocessing steps include removing accents, URLs, stopwords, and punctuation, applying regular expressions for tokenization, and filtering words based on document frequency. This workflow is ideal for text mining, topic modeling, and keyword analysis in Indonesian-language datasets.

In the Preprocess Text widget we can do several things, such as:

- Convert all letters to lowercase.

- Remove stop words, that is, words that carry little meaning, such as the conjunctions and prepositions "dan", "di", "ke", and "dari".

- Set stopword processing to the Indonesian language.

- Remove HTML tags.

- Remove URLs.

- And more.