Hadoop: Ecosystem

Source: http://www.bogotobogo.com/Hadoop/BigData_hadoop_Ecosystem.php

Why Hadoop? - Volume, Velocity, and Variety (3 Vs)

- Volume - Cheaper: scales to petabytes or more

- Velocity - Faster: parallel data processing

- Variety - Better: suited to particular types of Big Data problems

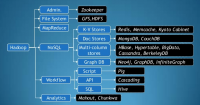

Ecosystem

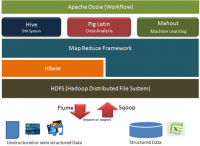

Image source: Hadoop Ecosystem

Samples

Picture source: Apache Hadoop Ecosystem

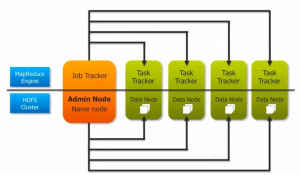

Hadoop consists of two main pieces, HDFS and MapReduce. MapReduce is the processing part of Hadoop, and the MapReduce server on a typical machine is called a TaskTracker. HDFS is the data part of Hadoop, and the HDFS server on a typical machine is called a DataNode.

MapReduce needs a coordinator, which is called the JobTracker. Regardless of how large the cluster is, we need a single JobTracker. The JobTracker is responsible for accepting a user's job, dividing it into tasks, and assigning each task to an individual TaskTracker. The TaskTracker then runs the task and reports its status as it runs and completes. The JobTracker is also responsible for noticing if a TaskTracker disappears because of a software or hardware failure. If it has gone away, the JobTracker automatically assigns its tasks to another TaskTracker.

The NameNode plays a similar role for HDFS as the JobTracker does for MapReduce.
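The coordination described above can be pictured with a toy simulation. This is only a sketch under our own assumptions, not Hadoop code: the class `ToyJobTracker`, its method names, and the round-robin assignment policy are all hypothetical and exist only to illustrate task assignment and reassignment after a TaskTracker is lost.

```python
class ToyJobTracker:
    """Toy coordinator: splits a job into tasks and remembers who runs each one."""

    def __init__(self, trackers):
        self.trackers = list(trackers)  # names of the live TaskTrackers
        self.assignments = {}           # task id -> TaskTracker name
        self._cursor = 0                # round-robin position (assumed policy)

    def _pick_tracker(self):
        if not self.trackers:
            raise RuntimeError("no live TaskTrackers left")
        tracker = self.trackers[self._cursor % len(self.trackers)]
        self._cursor += 1
        return tracker

    def submit(self, job_name, num_tasks):
        """Divide a job into tasks and assign each task to a TaskTracker."""
        for i in range(num_tasks):
            self.assignments[f"{job_name}-task{i}"] = self._pick_tracker()

    def tracker_lost(self, tracker):
        """Reassign every task owned by a TaskTracker that has disappeared."""
        self.trackers.remove(tracker)
        for task, owner in list(self.assignments.items()):
            if owner == tracker:
                self.assignments[task] = self._pick_tracker()


jt = ToyJobTracker(["tt1", "tt2", "tt3"])
jt.submit("wordcount", 6)
jt.tracker_lost("tt2")  # simulate a software or hardware failure
```

After the simulated failure, all six tasks are still assigned, and none of them belongs to `tt2`.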

However, since the project was first started, lots of other software has been built around it, and that's what we call the Hadoop Ecosystem. Some of this software is intended to make it easier to load data into the Hadoop cluster.

Much of it is designed to make Hadoop easier to use. In fact, writing MapReduce code isn't that simple. We need to write it in a programming language such as Java, Python, or Ruby. But there are quite a few people out there who aren't programmers but can write SQL queries to access data in a traditional relational database system, like SQL Server. And, of course, lots of business tools are used to hook into Hadoop.
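To give a feel for what writing mappers and reducers involves, here is a minimal word-count sketch in plain Python. It runs in a single process and does not use Hadoop at all; the function names `mapper`, `shuffle`, `reducer`, and `run_job` are our own choices for illustration, and the shuffle step stands in for the grouping that the framework would normally do between the two phases.

```python
from collections import defaultdict


def mapper(line):
    """Map phase: emit a (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key, values):
    """Reduce phase: combine all values seen for one key."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle, and reduce over an iterable of text lines."""
    mapped = (pair for line in lines for pair in mapper(line))
    return dict(reducer(key, values) for key, values in shuffle(mapped).items())


counts = run_job(["big data", "Big cluster"])
```

Even for a task this small, we have to think in terms of keys, values, and two separate phases, which is the burden the higher-level tools try to remove.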

Therefore, several open source projects have been created to make it easier for people to query their data without knowing how to code.

Instead of having to write mappers and reducers, in Hive we just write a statement which looks very much like a standard SQL query:

SELECT * FROM ...

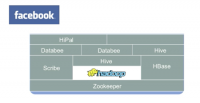

The Hive interpreter turns the SQL into MapReduce code, which then runs on the cluster. Facebook uses it extensively, for about 90% of their computation.

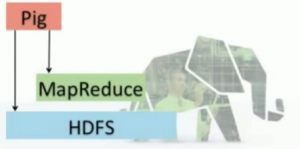

An alternative is Pig, which allows us to write code to analyse our data in a fairly simple scripting language rather than in MapReduce. It is a high-level language for routing data, developed at Yahoo, and it allows easy integration of Java for complex tasks.

Image source : Introduction to Apache Hadoop

The code is just turned into MapReduce and run on the cluster. Pig works like a compiler: just as a compiler translates our program into assembly, Pig translates our script into MapReduce jobs.

Though Hive and Pig are great, they're still running MapReduce jobs, and can take a reasonable amount of time to run, especially over large amounts of data.

Impala

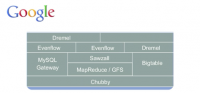

That's why we have another open source project called Impala. It was developed as a way to query our data with SQL, but it directly accesses the data in HDFS rather than needing MapReduce. Impala is optimized for low-latency queries. In other words, Impala queries run very quickly, typically many times faster than Hive, while Hive is optimized for running long batch processing jobs.

Sqoop

Another project used by many people is Sqoop. Sqoop takes data from a traditional relational database, such as Microsoft SQL Server, and puts it in HDFS as delimited files, so it can be processed along with other data on the cluster.
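The idea of turning relational rows into delimited files can be sketched without Sqoop itself. The example below is only an illustration under our own assumptions: it uses Python's built-in `sqlite3` as a stand-in for the relational database, a hypothetical `customers` table, and an in-memory string instead of a file in HDFS. The helper `export_table` is our own name, not a Sqoop API.

```python
import csv
import io
import sqlite3


def export_table(conn, table, delimiter=","):
    """Dump every row of `table` as delimited text, one record per line."""
    out = io.StringIO()
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    # The table name is interpolated only because this is a trusted toy example.
    for row in conn.execute(f'SELECT * FROM "{table}"'):
        writer.writerow(row)
    return out.getvalue()


# Stand-in for a traditional relational database (hypothetical sample data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Lin")])

text = export_table(conn, "customers")
```

Each database row becomes one line of delimited text, which is a shape that the MapReduce, Hive, and Pig tools described earlier can read alongside other files.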

Flume

Then, there's Flume. Flume is for streaming data into Hadoop. It ingests data as it's generated by external systems and puts it into the cluster. So, if we have servers generating data continuously, we can use Flume.

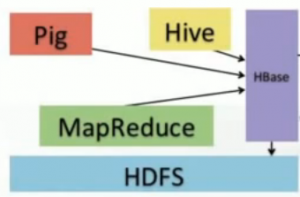

HBase is a real-time database built on top of HDFS. It's a column-family store based on Google's BigTable design.

Image source : Introduction to Apache Hadoop

We often need to read and write data in real time, and HBase is a top-level Apache project that meets that need. It provides a simple interface to our distributed data that allows incremental processing. HBase can be accessed by Hive, Pig, and MapReduce, and it stores its data in HDFS, so it's guaranteed to be reliable and durable. HBase is used for applications such as Facebook messages.
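A column-family store can be pictured as nested maps: row key, then column family and qualifier, then timestamped versions of a value. The sketch below is a toy in-memory model under our own assumptions, not the HBase client API; the class `ToyColumnFamilyTable` and its `put`/`get` methods are hypothetical names chosen for illustration.

```python
import time
from collections import defaultdict


class ToyColumnFamilyTable:
    """Toy model: row key -> "family:qualifier" -> versions, newest first."""

    def __init__(self, families):
        self.families = set(families)  # column families are fixed up front
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row_key, family, qualifier, value, ts=None):
        """Store a new version of one cell."""
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = time.time() if ts is None else ts
        cell = self.rows[row_key][f"{family}:{qualifier}"]
        cell.append((ts, value))
        cell.sort(key=lambda version: version[0], reverse=True)

    def get(self, row_key, family, qualifier):
        """Return the newest value of one cell, or None if it is missing."""
        cell = self.rows.get(row_key, {}).get(f"{family}:{qualifier}")
        return cell[0][1] if cell else None


table = ToyColumnFamilyTable(["msg"])
table.put("user1", "msg", "body", "hi", ts=1)
table.put("user1", "msg", "body", "hello", ts=2)
latest = table.get("user1", "msg", "body")
```

Here `latest` is the value written with the higher timestamp. Reads and writes address a single row by key, which is what makes this layout suitable for real-time access.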

KijiSchema provides a simple Java API and command line interface for importing, managing, and retrieving data from HBase by setting up HBase layouts using user-friendly tools including a DDL (data definition language or data description language).

HBaseAPIs.png

Picture source: A Hadoop Ecosystem Overview: Including HDFS, MapReduce, Yarn, Hive, Pig, and HBase.

Hive_Icon.png Hive.png

Image source : Hadoop Tutorial: Apache Hive

Hive is a data warehouse system layer built on Hadoop. It allows us to define a structure for our unstructured Big Data. With HiveQL, which is an SQL-like scripting language, we can simplify analysis and queries.

Note that Hive is NOT a database but uses a database to store metadata. The data that Hive processes is stored in HDFS. Hive runs on Hadoop and is NOT designed for on-line transaction processing because the latency for Hive queries is generally high. Therefore, Hive is NOT suited for real-time queries. Hive is best used for batch jobs over large sets of immutable data such as web logs.

Hue is a graphical front end to the cluster.

Oozie is a workflow scheduler tool that provides a workflow/coordination service to manage Hadoop jobs. We define when we want our MapReduce jobs to run, and Oozie will fire them up automatically. It can also trigger jobs when data becomes available. It was developed at Yahoo.

Mahout is a library for scalable machine learning and data mining.

Avro is a serialization and RPC framework.

In fact, there are so many ecosystem projects that making them all talk to one another, and work well, can be tricky. To make installing and maintaining a cluster like this easier, Cloudera has put together a distribution of Hadoop called CDH (Cloudera's Distribution including Apache Hadoop). It takes all the key ecosystem projects, along with Hadoop itself, and packages them together so that installation is a really easy process. It's free and open source, just like Hadoop itself. While we could install everything from scratch, it's far easier to use CDH.

ZooKeeper allows distributed processes to coordinate with each other through a shared hierarchical name space of data registers. - ZooKeeper wiki

Zookeeper.png Hadoop_Zookeeper.png

Image source : Introduction to Apache Hadoop
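A shared hierarchical name space of data registers, as ZooKeeper is described, looks a lot like a small file system in which every path holds a piece of data. The sketch below is a single-process toy under our own assumptions, not the ZooKeeper client API: the class `ToyZNodeTree` and its methods are hypothetical, and it leaves out everything that makes the real service distributed.

```python
class ToyZNodeTree:
    """Toy hierarchical namespace: every path is a small data register."""

    def __init__(self):
        self.nodes = {"/": b""}  # path -> data stored at that path

    def create(self, path, data=b""):
        """Create a node; its parent must already exist."""
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self.nodes:
            raise KeyError(f"parent does not exist: {parent}")
        if path in self.nodes:
            raise KeyError(f"node already exists: {path}")
        self.nodes[path] = data

    def get(self, path):
        """Read the data register at a path."""
        return self.nodes[path]

    def children(self, path):
        """List the names of the direct children of a path."""
        prefix = path.rstrip("/") + "/"
        names = []
        for candidate in self.nodes:
            rest = candidate[len(prefix):]
            if candidate.startswith(prefix) and rest and "/" not in rest:
                names.append(rest)
        return sorted(names)


tree = ToyZNodeTree()
tree.create("/app")
tree.create("/app/leader", b"host-1")  # e.g. a process records who the leader is
leader = tree.get("/app/leader")
```

Processes that agree on a path such as `/app/leader` can coordinate simply by reading and writing the register stored there.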

Kafka

Kafka is a distributed, partitioned, replicated commit log service. It provides the functionality of a messaging system, but with a unique design.
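The "partitioned commit log" idea can be sketched as a set of append-only lists in which every message gets an offset. This is a toy model under our own assumptions, not the Kafka client API: the class `ToyPartitionedLog` is hypothetical, the CRC32-based partition choice is our own stand-in for a partitioner, and distribution and replication are not modeled at all.

```python
import zlib


class ToyPartitionedLog:
    """Toy commit log: each partition is an append-only list of messages."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, message):
        """Append a message and return its (partition, offset)."""
        # A stable hash keeps all messages with the same key in one partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append(message)
        return partition, len(self.partitions[partition]) - 1

    def read(self, partition, offset):
        """Return every message in a partition from `offset` onward."""
        return self.partitions[partition][offset:]


log = ToyPartitionedLog(3)
p1, o1 = log.append("user-1", "login")
p2, o2 = log.append("user-1", "click")
replayed = log.read(p1, 0)
```

Because messages are only ever appended, a consumer just remembers an offset and can re-read from any earlier point, which is the main difference from a queue that deletes messages once they are delivered.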

Kafka.png

Kafka_ZooKeeper.png

Source: Simulating and transporting Realtime event stream with Apache Kafka

Data Center Orchestration - Mesos

Mesos.png

Image source: Apache Mesos

Mesos is built using the same principles as the Linux kernel, only at a different level of abstraction. The Mesos kernel runs on every machine and provides applications (e.g., Hadoop, Spark, Kafka, Elasticsearch) with APIs for resource management and scheduling across entire datacenter and cloud environments.

Mesos-DataCenter.png

Image source: Mesos Orchestrates a Data Center Like One Big Computer