Difference between revisions of "Orange: kNN"


Onnowpurbo (talk | contribs)

(5 intermediate revisions by the same user not shown)

Source: https://docs.orange.biolab.si/3/visual-programming/widgets/model/knn.html

Predictions based on the nearest training instances.

==Input==

Data: input dataset

Preprocessor: preprocessing method(s)

==Output==

Learner: kNN learning algorithm

Model: trained model

The kNN widget uses the kNN algorithm, which searches for the k closest training instances in feature space and uses their average as the prediction.

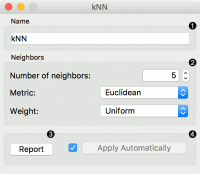

[[File:KNN-stamped.png|center|200px|thumb]]

* The name under which the learner appears in other widgets. The default name is “kNN”.

* Set the number of nearest neighbors, the distance parameter (metric), and the weights as model criteria.

** Metric can be:

*** Euclidean (“straight line” distance between two points)

*** Manhattan (sum of absolute differences of all attributes)

*** Maximal (greatest of absolute differences between attributes)

*** Mahalanobis (distance between point and distribution).

** The Weights you can use are:

*** Uniform: all points in each neighborhood are weighted equally.

*** Distance: closer neighbors of a query point have a greater influence than neighbors further away.

* Produce a report.

* When you change one or more settings, click Apply. This puts a new learner on the output and, if training examples are given, also constructs a new model and outputs it. Changes can also be applied automatically by ticking the box to the left of the Apply button.

==Examples==

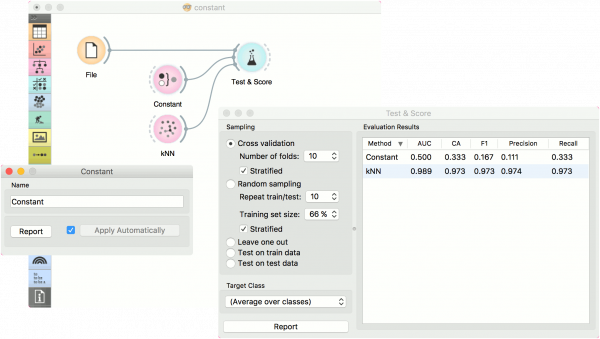

The first example is a classification task on the iris dataset. We compare the results of k-Nearest Neighbors with the default model Constant, which always predicts the majority class.

[[File:Constant-classification.png|center|600px|thumb]]

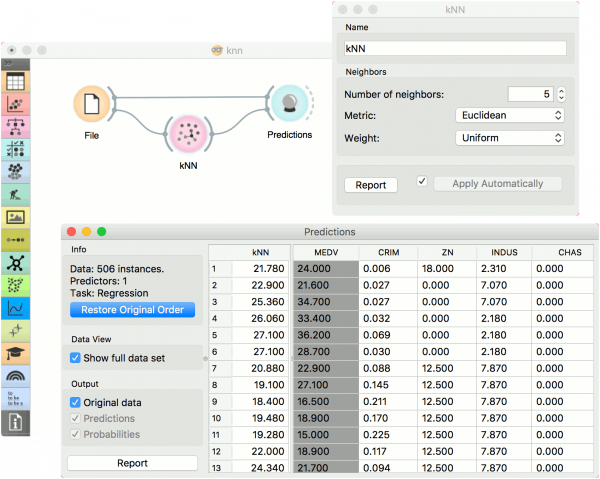

The second example is a regression task. This workflow shows how to use the Learner output. For this example, we use the housing dataset. We feed the kNN prediction model into Predictions and observe the predicted values.

[[File:KNN-regression.png|center|600px|thumb]]

==Youtube==

* [https://www.youtube.com/watch?v=vi0kMTmVL_E YOUTUBE: ORANGE model kNN]

==References==

* https://docs.orange.biolab.si/3/visual-programming/widgets/model/knn.html

==Interesting Links==

* [[Orange]]

Latest revision as of 05:55, 11 April 2020

Source: https://docs.orange.biolab.si/3/visual-programming/widgets/model/knn.html

Predictions based on the nearest training instances.

Input

- Data: input dataset
- Preprocessor: preprocessing method(s)

Output

- Learner: kNN learning algorithm
- Model: trained model

The kNN widget uses the kNN algorithm, which searches for the k closest training instances in feature space and uses their average as the prediction.
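The nearest-neighbor idea described above can be sketched in a few lines of plain Python. This is only an illustration, not the widget's actual implementation: the function name `knn_predict` and the tiny dataset are made up for this example, and the sketch assumes a numeric target and Euclidean distance.

```python
import math

def knn_predict(train_X, train_y, query, k=3):
    """Predict a numeric target as the mean target of the k nearest training rows.

    Hypothetical helper for illustration; not part of Orange.
    """
    # Pair each training row's Euclidean distance to the query with its target.
    distances = [(math.dist(row, query), target)
                 for row, target in zip(train_X, train_y)]
    # Sort by distance only and keep the k closest rows.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The prediction is the plain average of the neighbors' targets.
    return sum(target for _, target in nearest) / len(nearest)

# Made-up training data: four points in a 2-D feature space.
train_X = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (10.0, 10.0)]
train_y = [10.0, 20.0, 30.0, 100.0]

prediction = knn_predict(train_X, train_y, query=(2.0, 2.0), k=3)
print(prediction)
```

With `k=3`, the distant point at `(10.0, 10.0)` is ignored and only the three closest targets are averaged.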

- A name under which it will appear in other widgets. The default name is “kNN”.
- Set the number of nearest neighbors, the distance parameter (metric) and weights as model criteria.
  - Metric can be:
    - Euclidean (“straight line”, distance between two points)
    - Manhattan (sum of absolute differences of all attributes)
    - Maximal (greatest of absolute differences between attributes)
    - Mahalanobis (distance between point and distribution).
  - The Weights you can use are:
    - Uniform: all points in each neighborhood are weighted equally.
    - Distance: closer neighbors of a query point have a greater influence than the neighbors further away.
- Produce a report.
- When you change one or more settings, you need to click Apply, which will put a new learner on the output and, if the training examples are given, construct a new model and output it as well. Changes can also be applied automatically by clicking the box on the left side of the Apply button.
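To make the metric and weight options listed above more concrete, here is a rough sketch of three of the metrics and both weighting schemes. All function names are hypothetical. The "distance" scheme is written as inverse-distance weighting, which is one common way to give closer neighbors more influence; that choice is an assumption, since the text does not state the exact formula. Mahalanobis is left out because it needs a covariance matrix estimated from the data.

```python
def euclidean(a, b):
    # "Straight line" distance between two points.
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def manhattan(a, b):
    # Sum of absolute differences over all attributes.
    return sum(abs(x - y) for x, y in zip(a, b))

def maximal(a, b):
    # Greatest absolute difference over all attributes.
    return max(abs(x - y) for x, y in zip(a, b))

def weighted_average(distances, targets, weights="uniform"):
    """Combine neighbor targets; `distances` and `targets` are parallel lists."""
    if weights == "uniform":
        w = [1.0] * len(distances)
    elif weights == "distance":
        # Assumption: inverse-distance weights. Exact matches (distance 0)
        # are handled separately to avoid dividing by zero.
        exact = [t for d, t in zip(distances, targets) if d == 0]
        if exact:
            return sum(exact) / len(exact)
        w = [1.0 / d for d in distances]
    else:
        raise ValueError(f"unknown weights: {weights!r}")
    return sum(wi * t for wi, t in zip(w, targets)) / sum(w)

a, b = (0.0, 0.0), (3.0, 4.0)
print(euclidean(a, b), manhattan(a, b), maximal(a, b))

uniform_pred = weighted_average([1.0, 2.0], [10.0, 40.0], weights="uniform")
distance_pred = weighted_average([1.0, 2.0], [10.0, 40.0], weights="distance")
print(uniform_pred, distance_pred)
```

In the second call the neighbor at distance 1.0 counts twice as much as the one at distance 2.0, so the prediction moves toward its target.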

Examples

The first example is a classification task on the iris dataset. We compare the results of k-Nearest Neighbors with the default model Constant, which always predicts the majority class.
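The comparison against a majority-class baseline can be imitated outside of Orange with a small sketch. The data below is made up (it is not the iris dataset), and `knn_classify`, `majority_class`, and `accuracy` are hypothetical helpers. The sketch assumes uniform weights, so the predicted class is a simple majority vote among the k nearest neighbors.

```python
import math
from collections import Counter

def majority_class(labels):
    # Most frequent label; this is all a constant (baseline) model predicts.
    return Counter(labels).most_common(1)[0][0]

def knn_classify(train_X, train_y, query, k=3):
    # Rank training rows by Euclidean distance and vote among the k closest.
    order = sorted(range(len(train_X)),
                   key=lambda i: math.dist(train_X[i], query))
    return majority_class([train_y[i] for i in order[:k]])

def accuracy(predicted, actual):
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

# Made-up two-class data: class "a" is the majority in the training set.
train_X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (1.1, 1.2), (5.0, 5.0), (5.2, 4.9)]
train_y = ["a", "a", "a", "a", "b", "b"]
test_X = [(1.0, 0.9), (5.1, 5.0), (4.8, 5.1)]
test_y = ["a", "b", "b"]

knn_acc = accuracy([knn_classify(train_X, train_y, q) for q in test_X], test_y)
const_acc = accuracy([majority_class(train_y)] * len(test_X), test_y)
print(knn_acc, const_acc)
```

On this toy data the constant model is only right for the test row that happens to belong to the majority class, while the neighbor vote separates the two clusters.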

The second example is a regression task. This workflow shows how to use the Learner output. For this example, we use the housing dataset. We feed the kNN prediction model into Predictions and observe the predicted values.
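The split between a Learner (an algorithm with its settings) and a Model (the result of fitting it to data) can be mimicked in plain Python. The classes `SketchKNNLearner` and `SketchKNNModel` below are hypothetical and only illustrate the pattern; they are not Orange classes, and the numbers are invented rather than taken from the housing dataset.

```python
import math

class SketchKNNModel:
    """A fitted model: stores training data and answers prediction queries."""

    def __init__(self, X, y, k):
        self.X, self.y, self.k = X, y, k

    def __call__(self, query):
        # Average the targets of the k training rows closest to the query.
        order = sorted(range(len(self.X)),
                       key=lambda i: math.dist(self.X[i], query))
        nearest = order[:self.k]
        return sum(self.y[i] for i in nearest) / len(nearest)

class SketchKNNLearner:
    """An unfitted learner: holds settings and produces a model from data."""

    def __init__(self, k=5):
        self.k = k

    def __call__(self, X, y):
        return SketchKNNModel(X, y, self.k)

# Invented single-feature data (for example, rooms -> price).
X = [(4.0,), (5.0,), (6.0,), (7.0,), (8.0,)]
y = [15.0, 18.0, 22.0, 30.0, 40.0]

learner = SketchKNNLearner(k=2)   # analogous to the Learner output
model = learner(X, y)             # analogous to the Model output
predictions = [model(q) for q in [(6.4,), (4.2,)]]
print(predictions)
```

Keeping the learner separate from the model means the same settings can be reused on different training data, which is why a downstream step can accept either one.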