Orange: Tree

Page created by Onnowpurbo.

Onnowpurbo (talk | contribs) (→Contoh) |

||

| (7 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

| − | + | Widget Tree menggunakan algoritma Tree dengan kemampuan untuk melakukan forward pruning (pemangkasan ke depan). | |

| − | + | ==Input== | |

| − | + | Data: input dataset | |

| + | Preprocessor: preprocessing method(s) | ||

| − | + | ==Output== | |

| − | + | Learner: decision tree learning algorithm | |

| + | Model: trained model | ||

| − | + | Algoritma Tree adalah algoritma sederhana yang dapat memisahkan data menjadi node berdasarkan class purity (kemurnian kategori / class). Algoritma Tree adalah pendahulu Algoritma Random Forest. Widget Tree dalam Orange dirancang secara in-house dan dapat menangani dataset diskrit dan kontinyu. | |

| − | + | Widget Tree dapat digunakan untuk task classification dan task regression. | |

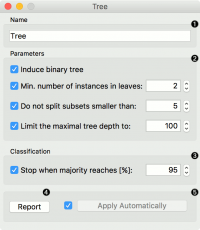

| − | Tree | + | [[File:Tree-stamped.png|center|200px|thumb]] |

| − | + | * The learner can be given a name under which it will appear in other widgets. The default name is “Tree”. | |

| + | * Tree parameters: | ||

| + | ** Induce binary tree: build a binary tree (split into two child nodes) | ||

| + | ** Min. number of instances in leaves: if checked, the algorithm will never construct a split which would put less than the specified number of training examples into any of the branches. | ||

| + | ** Do not split subsets smaller than: forbids the algorithm to split the nodes with less than the given number of instances. | ||

| + | ** Limit the maximal tree depth: limits the depth of the classification tree to the specified number of node levels. | ||

| − | .. | + | * Stop when majority reaches [%]: stop splitting the nodes after a specified majority threshold is reached |

| + | * Produce a report. After changing the settings, you need to click Apply, which will put the new learner on the output and, if the training examples are given, construct a new classifier and output it as well. * Alternatively, tick the box on the left and changes will be communicated automatically. | ||

| − | + | ==Contoh== | |

| − | + | Ada dua penggunaan yang biasanya digunakan pada widget Tree. Pertama, kita dapat meng-induksi sebuah model dan cek menggunakan tampilan yang seperti widget Tree Viewer. | |

| − | + | [[File:Tree-classification-visualize.png|center|200px|thumb]] | |

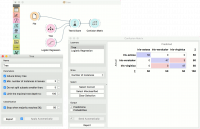

| − | + | Skema ke dua di workflow di bawah ini, widget Tree akan men-train sebuah model dan mengevaluasinya terhadap Logistic Regression. Perbandingan keakuratan widget Tree dan widget Logistic Regression di lakukan melalui widget Test & Score. Hasil widget Test & Score dapat dilihat melalui widget Confusion Matrix untuk membandingkan kesalahan klasifikasi yang terjadi. | |

| − | + | [[File:Tree-classification-model.png|center|200px|thumb]] | |

| − | + | Kita menggunakan iris dataset dalam ke dua contoh. Widget Tree dapat bekerja untuk task regression. Pada workflow di bawah ini menggunakan housing dataset dan berikan dataset ke widget Tree. Tree node yang di pilih dalam Tree Viewer akan di tampilkan di Scatter Plot dan kita bisa melihat bahwa contoh yang di pilih memiliki feature yang sama. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | [[File:Tree-regression-subset.png|center|200px|thumb]] | ||

==Referensi== | ==Referensi== | ||

Latest revision as of 11:26, 19 March 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/model/tree.html

The Tree widget uses a tree algorithm with forward pruning.

Input

- Data: input dataset

- Preprocessor: preprocessing method(s)

Output

- Learner: decision tree learning algorithm

- Model: trained model

Tree is a simple algorithm that splits the data into nodes by class purity. It is a precursor to Random Forest. The Tree widget in Orange is designed in-house and can handle both discrete and continuous datasets.
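To make "class purity" concrete, here is a minimal sketch in plain Python. It assumes Gini impurity as the purity measure, which is only one common choice; the source does not state which criterion the widget uses. The function names and the toy labels are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels; 0.0 means the node is pure."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left, right):
    """Size-weighted impurity of the two child nodes produced by a split."""
    n = len(left) + len(right)
    return (len(left) * gini(left) + len(right) * gini(right)) / n


# Toy data (assumption): six instances and two candidate splits.
parent = ["a", "a", "a", "b", "b", "b"]
good_left, good_right = ["a", "a", "a"], ["b", "b", "b"]
poor_left, poor_right = ["a", "a", "b"], ["a", "b", "b"]

print(gini(parent))
print(split_impurity(good_left, good_right))
print(split_impurity(poor_left, poor_right))
```

A split that separates the classes cleanly yields a lower weighted impurity than one that leaves both children mixed, so a tree learner of this kind would prefer the first split.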

The Tree widget can be used for both classification and regression tasks.

- The learner can be given a name under which it will appear in other widgets. The default name is “Tree”.

- Tree parameters:

  - Induce binary tree: build a binary tree (every split produces two child nodes).

  - Min. number of instances in leaves: if checked, the algorithm will never construct a split that would put fewer than the specified number of training examples into any of the branches.

  - Do not split subsets smaller than: forbids the algorithm from splitting nodes with fewer than the given number of instances.

  - Limit the maximal tree depth: limits the depth of the classification tree to the specified number of node levels.

- Stop when majority reaches [%]: stop splitting a node once the specified majority threshold is reached.

- Produce a report.

- After changing the settings, click Apply. This puts the new learner on the output and, if training examples are given, constructs a new classifier and outputs it as well. Alternatively, tick the box on the left and changes will be communicated automatically.
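The stopping parameters above can be read as checks applied before a node is split, which is what forward pruning amounts to. The sketch below expresses them in plain Python. It is not Orange's implementation: the function names, the default values, and the convention that the root has depth 0 are all assumptions made for illustration.

```python
from collections import Counter


def may_split_node(labels, depth, min_split=5, max_depth=100, majority=0.95):
    """Return True if a node holding these class labels may be split further."""
    # "Do not split subsets smaller than"
    if not labels or len(labels) < min_split:
        return False
    # "Limit the maximal tree depth" (root assumed to be at depth 0)
    if depth >= max_depth:
        return False
    # "Stop when majority reaches [%]", given here as a fraction
    top_count = Counter(labels).most_common(1)[0][1]
    if top_count / len(labels) >= majority:
        return False
    return True


def split_is_acceptable(branches, min_leaf=2):
    """Reject a split that leaves any branch with fewer than min_leaf instances."""
    # "Min. number of instances in leaves"
    return all(len(branch) >= min_leaf for branch in branches)


mixed = ["a", "a", "a", "b", "b", "b"]
print(may_split_node(mixed, depth=0))
print(split_is_acceptable([["a", "a"], ["b"]]))
```

Each check can only stop growth earlier, so tightening any of the parameters produces a tree that is the same size or smaller.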

Examples

There are two typical uses for the Tree widget. First, we can induce a model and inspect it in a viewer such as the Tree Viewer widget.

In the second workflow, the Tree widget trains a model that is evaluated against Logistic Regression. The accuracy of Tree and Logistic Regression is compared in the Test & Score widget. The output of Test & Score can then be passed to the Confusion Matrix widget to compare the misclassifications each model makes.
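As a rough illustration of what such a comparison computes, the sketch below tallies accuracy and a confusion matrix for two sets of predictions in plain Python. The labels are made up for illustration and are not results from Orange; `model_a` and `model_b` are hypothetical stand-ins for the two learners.

```python
from collections import Counter


def confusion_matrix(actual, predicted):
    """Count (actual, predicted) pairs; pairs that differ are misclassifications."""
    return Counter(zip(actual, predicted))


def accuracy(actual, predicted):
    """Fraction of instances whose predicted class equals the actual class."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


# Made-up labels (assumption), using iris class names only as examples.
actual = ["setosa", "versicolor", "virginica", "versicolor", "virginica"]
model_a = ["setosa", "versicolor", "versicolor", "versicolor", "virginica"]
model_b = ["setosa", "virginica", "virginica", "virginica", "virginica"]

for name, predicted in [("model_a", model_a), ("model_b", model_b)]:
    print(name, accuracy(actual, predicted))
    for (a, p), count in sorted(confusion_matrix(actual, predicted).items()):
        if a != p:
            print("  ", a, "predicted as", p, ":", count)
```

Accuracy gives a single number per model, while the off-diagonal counts show which classes each model confuses.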

We used the iris dataset in both examples. The Tree widget also works for regression tasks. The regression workflow uses the housing dataset and passes it to the Tree widget. The tree node selected in Tree Viewer is shown in the Scatter Plot, where we can see that the selected examples exhibit the same features.