Orange: Topic Modelling

Source: https://orange3-text.readthedocs.io/en/latest/widgets/topicmodelling-widget.html

The Topic Modelling widget performs topic modelling using Latent Dirichlet Allocation (LDA), Latent Semantic Indexing (LSI) or Hierarchical Dirichlet Process (HDP).

Input

Corpus: A collection of documents.

Output

Corpus: Corpus with topic weights appended.

Topics: Selected topics with word weights.

All Topics: Token weights per topic.

The Topic Modelling widget discovers abstract topics in a corpus based on clusters of words found in each document and their respective frequencies. A document typically contains several topics in different proportions, so the widget also reports the topic weights per document.
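Topic models such as gensim's LDA typically report a document's topic mixture as sparse (topic_id, weight) pairs, leaving out topics with very small weights. The sketch below is a minimal illustration that assumes that pair format. It expands the pairs into one column per topic and appends them to document records, roughly the way the Corpus output carries topic weights. The helper names, document records and weights are hypothetical.

```python
def dense_topic_weights(sparse_weights, num_topics):
    """Expand sparse (topic_id, weight) pairs into a full list of topic weights."""
    row = [0.0] * num_topics
    for topic_id, weight in sparse_weights:
        row[topic_id] = weight
    return row


def append_topic_columns(documents, doc_topics, num_topics):
    """Return copies of the document records with one 'Topic i' column per topic."""
    table = []
    for doc, sparse in zip(documents, doc_topics):
        row = dict(doc)  # copy so the input records are left untouched
        for i, weight in enumerate(dense_topic_weights(sparse, num_topics), start=1):
            row[f"Topic {i}"] = weight
        table.append(row)
    return table


# Hypothetical documents and topic mixtures (topic ids start at 0).
documents = [{"title": "The Frog King"}, {"title": "Little Red Cap"}]
doc_topics = [[(0, 0.8), (2, 0.2)], [(1, 0.6), (2, 0.4)]]

for row in append_topic_columns(documents, doc_topics, num_topics=3):
    print(row)
```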

The Topic Modelling widget wraps gensim's topic models (LSI, LDA, HDP).
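You can reproduce the three models outside Orange by fitting gensim's LsiModel, LdaModel or HdpModel on already tokenized texts, as the sketch below does. It illustrates the gensim API and assumes gensim is installed. It is not Orange's own wrapper code, and the function name fit_topic_model is hypothetical. gensim is imported inside the function, so you can load the snippet without it.

```python
def fit_topic_model(texts, method="lda", num_topics=10):
    """Fit a gensim topic model on a list of token lists (hypothetical helper)."""
    if method not in ("lsi", "lda", "hdp"):
        raise ValueError(f"unknown method: {method!r}")

    # Imported lazily so that defining this function does not require gensim.
    from gensim.corpora import Dictionary
    from gensim.models import HdpModel, LdaModel, LsiModel

    dictionary = Dictionary(texts)  # maps each token to an integer id
    bow = [dictionary.doc2bow(tokens) for tokens in texts]  # bag-of-words vectors

    if method == "lsi":
        model = LsiModel(bow, num_topics=num_topics, id2word=dictionary)
    elif method == "lda":
        model = LdaModel(bow, num_topics=num_topics, id2word=dictionary)
    else:
        # HdpModel takes no num_topics argument; the topic count is bounded by
        # T (top-level truncation), left at gensim's default here.
        model = HdpModel(bow, id2word=dictionary)
    return model, dictionary
```

With gensim installed, you can list the topics by calling `model, dictionary = fit_topic_model(tokenized_texts, "lsi")` and then `model.show_topics()`. Here `tokenized_texts` stands for any list of token lists.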

Latent Semantic Indexing (LSI) can return both positive and negative words (words that are in a topic and those that are not), along with topic weights that can be either positive or negative. As stated by the main gensim developer, Radim Řehůřek: “LSI topics are not supposed to make sense; since LSI allows negative numbers, it boils down to delicate cancellations between topics and there’s no straightforward way to interpret a topic.”
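The signed weights arise because LSI factorizes the term-document matrix with a truncated singular value decomposition (SVD). The numpy sketch below applies a plain SVD to a tiny hypothetical count matrix. gensim's LsiModel uses its own incremental decomposition and is often applied to tf-idf weights, so this shows the idea rather than the widget's exact computation. All counts are non-negative and the words are linked through shared documents. Under those conditions the first topic's weights all share one sign, and every later topic, being orthogonal to it, must mix positive and negative weights.

```python
import numpy as np

# Hypothetical toy corpus, already tokenized.
docs = [
    ["king", "queen", "castle"],
    ["king", "castle", "castle"],
    ["wolf", "forest", "grandmother"],
    ["wolf", "forest", "forest"],
    ["queen", "forest"],
]
vocab = sorted({word for doc in docs for word in doc})
index = {word: i for i, word in enumerate(vocab)}

# Term-document count matrix: one row per word, one column per document.
A = np.zeros((len(vocab), len(docs)))
for j, doc in enumerate(docs):
    for word in doc:
        A[index[word], j] += 1

# Truncated SVD: the first k left singular vectors hold the word weights per topic.
U, S, Vt = np.linalg.svd(A, full_matrices=False)
k = 2
topics = U[:, :k]

for t in range(k):
    ranked = sorted(zip(vocab, topics[:, t]), key=lambda pair: -abs(pair[1]))
    print(f"Topic {t + 1}: " + ", ".join(f"{w} {x:+.2f}" for w, x in ranked))
```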

Latent Dirichlet Allocation (LDA) is easier to interpret but slower than Latent Semantic Indexing (LSI). Hierarchical Dirichlet Process (HDP) has many parameters; the one that corresponds to the number of topics is Top level truncation level (T). The smallest number of topics that one can retrieve with HDP is 10.

- Topic modelling algorithm:

  - Latent Semantic Indexing. Returns both negative and positive words and topic weights.

  - Latent Dirichlet Allocation

  - Hierarchical Dirichlet Process

- Parameters for the algorithm. LSI and LDA accept only the number of topics modelled, with the default set to 10.

  HDP, however, has more parameters. Because this algorithm is computationally very demanding, we recommend either trying it on a subset first or setting all the required parameters in advance and only then running the algorithm (connecting the input to the widget).

  - First level concentration (γ): concentration parameter of the Dirichlet process at the first (corpus) level

  - Second level concentration (α): concentration parameter of the Dirichlet process at the second (document) level

  - The topic Dirichlet (α): concentration parameter used for the topic draws (called eta in gensim; not the same parameter as the document-level α)

  - Top level truncation (Τ): corpus-level truncation (number of topics)

  - Second level truncation (Κ): document-level truncation (number of topics)

  - Learning rate (κ): step size

  - Slow down parameter (τ): down-weights the early iterations of online learning

- Produce a report.

- If Commit Automatically is on, changes are communicated automatically. Alternatively press Commit.
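If you move from the widget to gensim directly, the HDP labels above map onto keyword arguments of gensim's HdpModel. The mapping below is assembled from gensim's parameter descriptions rather than from Orange's source, so treat it as an assumption. Note that the widget uses α for two different parameters, and the topic Dirichlet corresponds to gensim's eta. The helper name hdp_kwargs is hypothetical.

```python
# Assumed mapping from the widget's HDP labels to gensim HdpModel keyword arguments.
HDP_LABEL_TO_KWARG = {
    "First level concentration (γ)": "gamma",   # corpus-level DP concentration
    "Second level concentration (α)": "alpha",  # document-level DP concentration
    "The topic Dirichlet (α)": "eta",           # Dirichlet prior for topic-word draws
    "Top level truncation (Τ)": "T",            # upper bound on corpus-level topics
    "Second level truncation (Κ)": "K",         # upper bound on topics per document
    "Learning rate (κ)": "kappa",
    "Slow down parameter (τ)": "tau",
}


def hdp_kwargs(settings):
    """Translate {widget label: value} into keyword arguments for HdpModel."""
    unknown = set(settings) - set(HDP_LABEL_TO_KWARG)
    if unknown:
        raise KeyError(f"unknown HDP setting(s): {sorted(unknown)}")
    return {HDP_LABEL_TO_KWARG[label]: value for label, value in settings.items()}


kwargs = hdp_kwargs({"First level concentration (γ)": 1.0, "The topic Dirichlet (α)": 0.01})
print(kwargs)  # {'gamma': 1.0, 'eta': 0.01}
```

With gensim installed, you could pass the result on as `HdpModel(bow, id2word=dictionary, **kwargs)`, where `bow` and `dictionary` are built as in any gensim workflow. Setting the Top level truncation label this way yields the `T` argument.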

Examples

Exploring Individual Topics

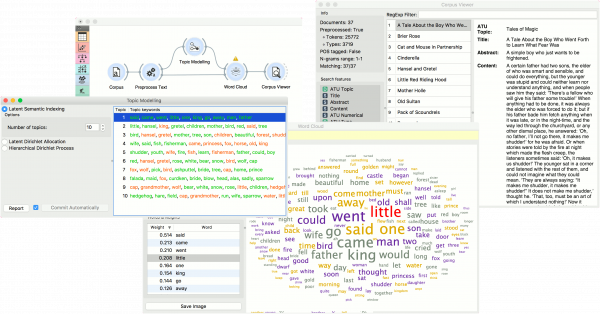

This example shows a simple use of the Topic Modelling widget. First, we load the grimm-tales-selected.tab data set and use Preprocess Text to tokenize by words only and remove stopwords. Then we connect Preprocess Text to Topic Modelling, where we use a simple Latent Semantic Indexing (LSI) model to find 10 topics in the text.
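The preprocessing step can be approximated in plain Python: lowercase the text, keep only word tokens and drop stopwords. This is a minimal sketch that assumes a `\w+` regular expression for word tokens, lowercasing, and a small hypothetical stopword set. Preprocess Text itself offers configurable tokenizers and full stopword lists.

```python
import re

# Hypothetical, deliberately tiny stopword list; real lists are much longer.
STOPWORDS = {"a", "an", "and", "in", "near", "once", "the", "there", "upon", "was", "who"}


def preprocess(text):
    """Lowercase the text, keep word tokens only, and remove stopwords."""
    tokens = re.findall(r"\w+", text.lower())
    return [token for token in tokens if token not in STOPWORDS]


print(preprocess("Once upon a time there was a little girl who lived in a village near the forest."))
# ['time', 'little', 'girl', 'lived', 'village', 'forest']
```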

Latent Semantic Indexing (LSI) provides both positive and negative weights per topic. A positive weight means the word is highly representative of a topic, while a negative weight means the word is highly unrepresentative of the topic (the less it occurs in a text, the more likely the topic). Positive words are colored green and negative words are colored red.
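The green/red coloring amounts to splitting a topic's words by the sign of their weight. The sketch below does this for a hypothetical list of LSI word weights and orders the words by absolute weight. The weights are made up for illustration, and a zero weight is tagged as neutral.

```python
def color_words(word_weights):
    """Tag each word green (positive weight), red (negative) or neutral, strongest first."""
    ranked = sorted(word_weights, key=lambda pair: abs(pair[1]), reverse=True)
    return [
        (word, weight, "green" if weight > 0 else "red" if weight < 0 else "neutral")
        for word, weight in ranked
    ]


# Hypothetical LSI weights for one topic.
topic = [("king", 0.41), ("wolf", -0.37), ("castle", 0.22), ("forest", -0.05)]
for word, weight, color in color_words(topic):
    print(f"{word:>8} {weight:+.2f} {color}")
```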

We then select the first topic and display the most frequent words in the topic in Word Cloud. We also connect Preprocess Text to Word Cloud so that it can output the selected documents. Now we can select a specific word in Word Cloud, say 'little'. It will be colored red and also highlighted in the word list on the left. Finally, we can observe all the documents containing the word 'little' in Corpus Viewer.
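Picking a topic's strongest words and then finding the documents that contain a selected word is essentially a sort followed by a filter over the tokenized corpus. A minimal sketch, with hypothetical helper names, topic weights and tokenized documents:

```python
def top_words(word_weights, n=3):
    """Return the n words with the largest weight in a topic."""
    ranked = sorted(word_weights.items(), key=lambda kv: kv[1], reverse=True)
    return [word for word, _ in ranked[:n]]


def documents_containing(word, corpus):
    """Return the indices of documents whose tokens include `word`."""
    return [i for i, tokens in enumerate(corpus) if word in tokens]


# Hypothetical topic weights and tokenized tales.
topic_weights = {"little": 0.30, "king": 0.25, "said": 0.20, "wolf": 0.05}
corpus = [
    ["little", "red", "cap", "wolf"],
    ["king", "daughter", "frog"],
    ["little", "brother", "sister"],
]
print(top_words(topic_weights))                # ['little', 'king', 'said']
print(documents_containing("little", corpus))  # [0, 2]
```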

Topic Visualization

In the second example, we will look at the correlation between topics and words/documents. We are still using the grimm-tales-selected.tab corpus. In Preprocess Text we are using the default preprocessing, with an additional filter by document frequency (0.1 - 0.9). In Topic Modelling we are using an LDA model with 5 topics.
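A document-frequency filter keeps only tokens that occur in a given share of the documents, here between 10% and 90%. This removes both very rare and near-ubiquitous words. The sketch below assumes inclusive bounds on the relative document frequency. This page does not say exactly how Preprocess Text treats the boundaries.

```python
from collections import Counter


def filter_by_document_frequency(docs, min_df=0.1, max_df=0.9):
    """Keep tokens whose share of documents lies in [min_df, max_df] (bounds assumed inclusive)."""
    n_docs = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))  # count each token at most once per document
    keep = {token for token, count in df.items() if min_df <= count / n_docs <= max_df}
    return [[token for token in tokens if token in keep] for tokens in docs]


docs = [["the", "wolf"], ["the", "king"], ["the", "wolf", "forest"], ["the", "queen"]]
print(filter_by_document_frequency(docs))
# [['wolf'], ['king'], ['wolf', 'forest'], ['queen']]
```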

Connect Topic Modelling to MDS. Ensure the link is set to All Topics - Data. Topic Modelling will output a matrix of word weights by topic.
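Each row of All Topics is a topic described by its word weights, so MDS places topics with similar word distributions close together. Orange's MDS widget optimizes the layout iteratively. The numpy sketch below swaps in classical (Torgerson) MDS, which has a closed-form solution, and applies it to a hypothetical weight matrix of three topics and four words.

```python
import numpy as np


def classical_mds(X, n_components=2):
    """Embed the rows of X in n_components dimensions via classical (Torgerson) MDS."""
    sq_norms = np.sum(X ** 2, axis=1)
    D2 = sq_norms[:, None] + sq_norms[None, :] - 2 * X @ X.T  # squared Euclidean distances
    n = X.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n  # centering matrix
    B = -0.5 * J @ D2 @ J  # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)
    order = np.argsort(eigvals)[::-1][:n_components]  # largest eigenvalues first
    scale = np.sqrt(np.clip(eigvals[order], 0, None))
    return eigvecs[:, order] * scale


# Hypothetical word weights: one row per topic, one column per word.
topic_word = np.array([
    [0.40, 0.30, 0.20, 0.10],
    [0.10, 0.20, 0.30, 0.40],
    [0.10, 0.40, 0.40, 0.10],
])
coords = classical_mds(topic_word)
print(coords)  # one 2-D point per topic
```

With only three topics the 2-D layout preserves the pairwise distances exactly. With more topics it is a best low-rank approximation.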

In MDS, the points are now topics. We have set the size of the points to Marginal topic probability, which is an additional column of All Topics. It reports the marginal probability of the topic in the corpus (how strongly the topic is represented in the corpus).
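A marginal topic probability can be read as P(topic) over the whole corpus. Each document's topic proportions are averaged, with documents weighted by their length so that long documents count more: P(t) = Σ_d θ_{d,t} n_d / Σ_d n_d. This sketch assumes that length-weighted definition, since the page does not give the widget's exact formula, and uses hypothetical proportions and lengths.

```python
def marginal_topic_probability(doc_topic, doc_lengths):
    """Length-weighted average of per-document topic proportions (assumed definition)."""
    total = sum(doc_lengths)
    num_topics = len(doc_topic[0])
    return [
        sum(theta[t] * n for theta, n in zip(doc_topic, doc_lengths)) / total
        for t in range(num_topics)
    ]


# Hypothetical: two documents, two topics; the second document is three times longer.
doc_topic = [[0.75, 0.25], [0.25, 0.75]]
doc_lengths = [100, 300]
print(marginal_topic_probability(doc_topic, doc_lengths))  # [0.375, 0.625]
```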

We can now explore which words are representative of the topic. Select, say, Topic 5 from the plot and connect MDS to Box Plot. Make sure the output is set to Data - Data (not Selected Data - Data).

In Box Plot, set the subgroup to Selected and check the Order by relevance to subgroups box. This option sorts the variables by how well they separate the selected subgroup values. In our case, this shows which words are the most representative of the topic we have selected in the plot (subgroup Yes means selected).
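Ordering variables by relevance to subgroups means scoring each word column by how differently it behaves in the selected topic compared with the remaining ones. This page does not describe Orange's exact scoring. The sketch below swaps in a one-way ANOVA F statistic for the two groups (Selected = Yes / No) as one reasonable choice. It runs on hypothetical word weights for five topics, with Topic 5 selected.

```python
def f_statistic(selected, others):
    """One-way ANOVA F statistic for two groups of values."""
    groups = [selected, others]
    values = selected + others
    grand_mean = sum(values) / len(values)
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum(sum((x - sum(g) / len(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = len(groups) - 1, len(values) - len(groups)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / df_between) / (ss_within / df_within)


def order_by_relevance(columns, selected_rows):
    """Sort word columns by how strongly they separate selected rows from the rest."""
    def score(values):
        selected = [v for i, v in enumerate(values) if i in selected_rows]
        others = [v for i, v in enumerate(values) if i not in selected_rows]
        return f_statistic(selected, others)
    return sorted(columns, key=lambda word: score(columns[word]), reverse=True)


# Hypothetical word weights for Topics 1-5 (row index 4 is Topic 5, the selected one).
columns = {
    "forest": [0.03, 0.02, 0.04, 0.03, 0.03],
    "king": [0.01, 0.02, 0.01, 0.02, 0.09],
    "wolf": [0.02, 0.01, 0.03, 0.02, 0.06],
}
print(order_by_relevance(columns, selected_rows={4}))  # ['king', 'wolf', 'forest']
```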

We can see that little, children and kings are the most representative words of Topic 5, with good separation between the word frequency for this topic and all the others. Select other topics in MDS and see how the Box Plot changes.