Orange: Rank


Latest revision as of 09:56, 20 April 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/data/rank.html

The Rank widget scores and ranks the attributes of classification or regression data.

Input

- Data: input dataset

- Scorer: models for feature scoring

Output

Reduced Data: dataset with selected attributes

The Rank widget takes a dataset with a class label (classification or regression) and scores the attributes according to their correlation with the class. Rank also accepts models for scoring, such as linear regression, logistic regression, random forest, SGD, etc.
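To make "scoring attributes by their correlation with the class" concrete, here is a minimal sketch for a numeric (regression) class that ranks attributes by the absolute value of the Pearson correlation coefficient. It is a simplified plain-Python illustration, not the scorer Orange itself uses; the function names and the toy columns are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear information
    return cov / (sx * sy)


def rank_by_correlation(columns, target):
    """Return (name, |r|) pairs sorted from most to least correlated with the target."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical toy regression data.
columns = {
    "size": [1.0, 2.0, 3.0, 4.0, 5.0],   # rises with the target
    "noise": [2.0, 1.0, 2.0, 1.0, 2.0],  # unrelated to the target
    "neg": [5.0, 4.0, 3.0, 2.0, 1.0],    # falls as the target rises
}
target = [1.1, 2.0, 2.9, 4.2, 5.0]

ranking = rank_by_correlation(columns, target)
for name, score in ranking:
    print(f"{name:6s} {score:.3f}")
```

Taking the absolute value ranks the strongly negative relationship ("neg") as high as the strongly positive one ("size"), since both are equally informative about the target.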

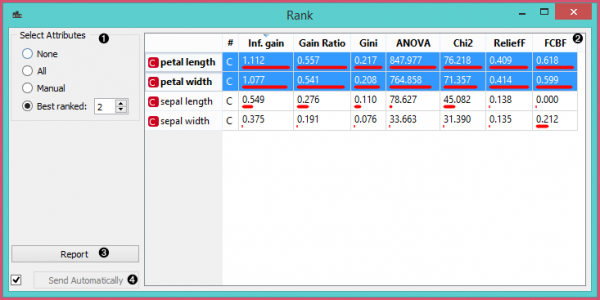

- Select attributes from the data table.

- Data table with attributes (rows) and their scores by different scoring methods (columns)

- Produce a report.

- If ‘Send Automatically’ is ticked, the widget automatically communicates changes to other widgets.

Scoring method

- Information Gain: the expected amount of information (reduction of entropy)

- Gain Ratio: a ratio of the information gain and the attribute’s intrinsic information, which reduces the bias towards multivalued features that occurs in information gain

- Gini: the inequality among values of a frequency distribution

- ANOVA: the difference between average values of the feature in different classes

- Chi2: dependence between the feature and the class as measured by the chi-square statistic

- ReliefF: the ability of an attribute to distinguish between classes on similar data instances

- FCBF (Fast Correlation Based Filter): entropy-based measure, which also identifies redundancy due to pairwise correlations between features
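Several of the measures above have standard textbook definitions for discrete attributes. The sketch below implements information gain, gain ratio, Gini (taken here, as an assumption, to mean the decrease in Gini impurity after splitting on the attribute) and the chi-square statistic in plain Python. It is not Orange's implementation: it handles only discrete attributes, and ANOVA, ReliefF and FCBF are left out. The weather-style toy data and all function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of values."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini_impurity(labels):
    """Gini impurity: 1 minus the sum of squared value proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def _split(feature, labels):
    """Group class labels by the value of a discrete feature."""
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(value, []).append(label)
    return groups.values()


def info_gain(feature, labels):
    """Reduction in class entropy after splitting on the feature."""
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in _split(feature, labels))
    return entropy(labels) - remainder


def gain_ratio(feature, labels):
    """Information gain divided by the feature's intrinsic (split) information."""
    intrinsic = entropy(feature)
    return info_gain(feature, labels) / intrinsic if intrinsic > 0 else 0.0


def gini_gain(feature, labels):
    """Decrease in Gini impurity after splitting on the feature."""
    n = len(labels)
    remainder = sum(len(g) / n * gini_impurity(g) for g in _split(feature, labels))
    return gini_impurity(labels) - remainder


def chi2(feature, labels):
    """Pearson chi-square statistic of the feature-by-class contingency table."""
    n = len(labels)
    f_counts, c_counts = Counter(feature), Counter(labels)
    observed = Counter(zip(feature, labels))
    stat = 0.0
    for v, fv in f_counts.items():
        for c, cc in c_counts.items():
            expected = fv * cc / n  # count expected if feature and class were independent
            stat += (observed[(v, c)] - expected) ** 2 / expected
    return stat


play = ["no", "no", "yes", "yes"]
features = {
    "outlook": ["sunny", "sunny", "rain", "rain"],  # perfectly predicts play
    "windy": ["yes", "no", "yes", "no"],            # independent of play
    "id": ["a", "b", "c", "d"],                     # unique per row
}
for name, values in features.items():
    print(f"{name:8s} IG={info_gain(values, play):.3f} "
          f"GR={gain_ratio(values, play):.3f} "
          f"Gini={gini_gain(values, play):.3f} "
          f"Chi2={chi2(values, play):.3f}")
```

The unique-per-row "id" attribute gets the same information gain as "outlook" (each of its groups is trivially pure), but its gain ratio is halved because its intrinsic information is 2 bits; this is the bias toward multivalued features that gain ratio is meant to reduce.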

In addition, we can connect certain learners that enable scoring features by how important they are in the models the learners build (e.g. Linear Regression / Logistic Regression, Random Forest, SGD).
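The idea of scoring features by their importance in a learned model can be sketched for the linear-regression case: fit ordinary least squares on standardized features and use the absolute coefficients as scores. This is an assumption about one reasonable way to turn a linear model into feature scores, not a description of how Orange derives scores from a connected learner; the helper names and the data are hypothetical.

```python
import math


def standardize(column):
    """Scale a numeric column to zero mean and unit (population) variance."""
    n = len(column)
    mean = sum(column) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in column) / n)
    return [(x - mean) / sd for x in column]


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def linear_importance(columns, target):
    """Fit least squares on standardized features; return (name, |coef|) sorted descending."""
    names = list(columns)
    xs = [standardize(columns[name]) for name in names]
    mean_y = sum(target) / len(target)
    y = [t - mean_y for t in target]  # centering y removes the need for an intercept
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(p * q for p, q in zip(xi, xj)) for xj in xs] for xi in xs]
    xty = [sum(p * q for p, q in zip(xi, y)) for xi in xs]
    weights = solve(xtx, xty)
    return sorted(((n, abs(w)) for n, w in zip(names, weights)),
                  key=lambda kv: kv[1], reverse=True)


# Hypothetical data: the target is exactly 3*a - b; c plays no role.
columns = {
    "a": [1, 2, 3, 4, 5, 6],
    "b": [2, 1, 4, 3, 6, 5],
    "c": [3, 1, 2, 2, 1, 3],
}
target = [1, 5, 5, 9, 9, 13]

imp = linear_importance(columns, target)
for name, score in imp:
    print(f"{name}: {score:.3f}")
```

Standardizing first matters: raw coefficients depend on each feature's units, so without scaling a feature could look important merely because it is measured in small units and therefore gets a large coefficient.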

Example: Attribute Ranking and Selection

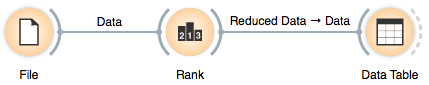

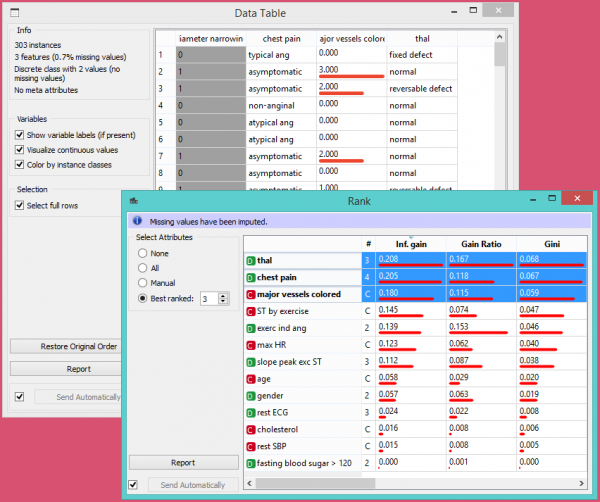

Di bawah ini, kita menggunakan widget Rank segera setelah widget File untuk mengurangi set atribut data dan hanya menyertakan yang paling informatif:

Perhatikan bagaimana widget Rank menghasilkan set data yang hanya menyertakan atribut dengan skor terbaik:
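The reduced output can be sketched as "keep the k best-scored attributes plus the class". The snippet below assumes the scores are already computed and that a fixed number k of attributes is kept; the data layout (class as the last column), the scores and the function name are hypothetical.

```python
def reduce_data(header, rows, scores, k):
    """Keep only the k best-scored attribute columns, plus the class column.

    header: attribute names followed by the class name as the last entry
    rows:   list of rows, each holding attribute values then the class value
    scores: dict mapping attribute name -> score (higher is better)
    """
    attributes = header[:-1]
    best = sorted(attributes, key=lambda name: scores[name], reverse=True)[:k]
    keep = [header.index(name) for name in best] + [len(header) - 1]
    return [header[i] for i in keep], [[row[i] for i in keep] for row in rows]


header = ["outlook", "windy", "id", "play"]
rows = [
    ["sunny", "yes", "a", "no"],
    ["sunny", "no", "b", "no"],
    ["rain", "yes", "c", "yes"],
    ["rain", "no", "d", "yes"],
]
scores = {"outlook": 1.0, "windy": 0.0, "id": 0.5}  # hypothetical scores

new_header, new_rows = reduce_data(header, rows, scores, k=2)
print(new_header)
for row in new_rows:
    print(row)
```

Columns are emitted in ranking order here; keeping the original column order instead would only change how `best` is sorted.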

Example: Feature Subset Selection for Machine Learning

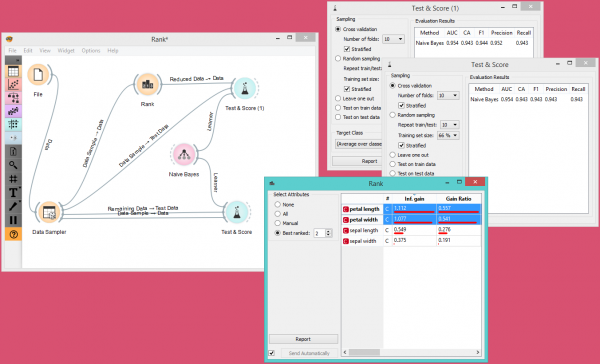

Contoh yang lebih rumit dari widget Rank, dalam workflow di bawah ini, kita pertama-tama membagi data menjadi satu set train dan satu set test. Di cabang atas, data training melewatikan ke widget Rank untuk memilih atribut yang paling informatif, sedangkan di cabang bawah tidak ada pemilihan feature. Baik set data feature yang dipilih dan original dilewatkan ke widget Test & Score mereka sendiri, yang mengembangkan classifier widget Naive Bayes dan menilainya pada set test.

Untuk dataset dengan banyak fitur, pemilihan feature classifier Naive Bayes, seperti yang ditunjukkan di gambar berikut, seringkali menghasilkan akurasi prediksi yang lebih baik.
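The structure of this workflow (split first, rank attributes on the training data only, train Naive Bayes with and without selection, evaluate both on the test set) can be sketched in plain Python. Everything here is an assumption for illustration: the synthetic data, information gain as the ranking criterion, keeping k = 2 attributes, and the simple categorical Naive Bayes with Laplace smoothing. It is not how Orange's Test & Score widget works internally, and the printed accuracies depend on the data.

```python
import math
import random
from collections import Counter, defaultdict


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(values, labels):
    """Reduction in class entropy after splitting on a discrete feature."""
    n = len(labels)
    groups = defaultdict(list)
    for v, y in zip(values, labels):
        groups[v].append(y)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())


def train_nb(rows, labels, features):
    """Categorical Naive Bayes: class counts and per-(feature, class) value counts."""
    priors = Counter(labels)
    counts = defaultdict(Counter)
    for row, y in zip(rows, labels):
        for f in features:
            counts[(f, y)][row[f]] += 1
    return priors, counts, features


def predict_nb(model, row, n_values=2):
    """Class with the highest log posterior; Laplace smoothing, assumes binary features."""
    priors, counts, features = model
    total = sum(priors.values())

    def log_post(y):
        score = math.log(priors[y] / total)
        for f in features:
            score += math.log((counts[(f, y)][row[f]] + 1) / (priors[y] + n_values))
        return score

    return max(priors, key=log_post)


def accuracy(model, rows, labels):
    return sum(predict_nb(model, r) == y for r, y in zip(rows, labels)) / len(labels)


# Hypothetical synthetic data: "signal" agrees with the class 90% of the time,
# the eight "noise_i" features are coin flips unrelated to the class.
rng = random.Random(0)
names = ["signal"] + [f"noise_{i}" for i in range(8)]
rows, labels = [], []
for _ in range(200):
    y = rng.randint(0, 1)
    row = {"signal": y if rng.random() < 0.9 else 1 - y}
    row.update({f"noise_{i}": rng.randint(0, 1) for i in range(8)})
    rows.append(row)
    labels.append(y)

# Split first, then rank on the training part only.
train_x, test_x = rows[:140], rows[140:]
train_y, test_y = labels[:140], labels[140:]
ranked = sorted(names, key=lambda f: info_gain([r[f] for r in train_x], train_y),
                reverse=True)
selected = ranked[:2]

acc_selected = accuracy(train_nb(train_x, train_y, selected), test_x, test_y)
acc_all = accuracy(train_nb(train_x, train_y, names), test_x, test_y)
print("selected:", selected)
print(f"accuracy with selection: {acc_selected:.3f}, without: {acc_all:.3f}")
```

Ranking after the split keeps the test rows out of feature selection; scoring attributes on the full dataset would let test information leak into the model and make the evaluation optimistic.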