Orange: Confusion Matrix

Revisions by Onnowpurbo.

Latest revision as of 06:23, 28 February 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/evaluate/confusionmatrix.html

Shows the proportions between the predicted and actual class.

Input

Evaluation results: results of testing classification algorithms

Output

Selected Data: data subset selected from confusion matrix

Data: data with the additional information on whether a data instance was selected

Confusion Matrix gives the number or proportion of instances for each combination of predicted and actual class. Selecting elements of the matrix sends the corresponding instances to the output. This way, we can observe which specific instances were misclassified and how.
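
The counting and cell selection described above can be sketched in plain Python. This is only an illustration, not the widget's implementation: the labels are a hypothetical toy sample, and the helper names `confusion_counts` and `select_cells` are invented for this example.

```python
from collections import Counter

# Hypothetical toy data: actual and predicted labels for eight instances.
actual = ["setosa", "setosa", "versicolor", "versicolor",
          "versicolor", "virginica", "virginica", "virginica"]
predicted = ["setosa", "setosa", "versicolor", "virginica",
             "versicolor", "virginica", "versicolor", "virginica"]


def confusion_counts(actual, predicted):
    """Count instances for every (actual, predicted) pair."""
    return Counter(zip(actual, predicted))


def select_cells(actual, predicted, cells):
    """Return indices of instances that fall into any of the selected cells."""
    cells = set(cells)
    return [i for i, pair in enumerate(zip(actual, predicted)) if pair in cells]


counts = confusion_counts(actual, predicted)
classes = sorted(set(actual) | set(predicted))

# Rows are actual classes, columns are predicted classes.
matrix = [[counts[(a, p)] for p in classes] for a in classes]

# Select the cell "actual versicolor, predicted virginica".
selected = select_cells(actual, predicted, [("versicolor", "virginica")])

# Analogue of the Data output: every instance plus a "selected" flag.
annotated = [(a, p, i in selected)
             for i, (a, p) in enumerate(zip(actual, predicted))]
```

Here `selected` plays the role of the Selected Data output, while `annotated` mirrors the Data output, which keeps all instances and only marks the selected ones.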

The widget usually gets the evaluation results from Test & Score; an example of the schema is shown below.

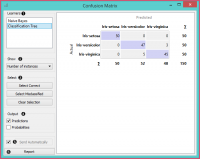

- When evaluation results contain data on multiple learning algorithms, we have to choose one in the Learners box. The snapshot shows the confusion matrix for Tree and Naive Bayesian models trained and tested on the iris data. The right-hand side of the widget contains the matrix for the naive Bayesian model (since this model is selected on the left). Each row corresponds to a correct class, while columns represent the predicted classes. For instance, four instances of Iris-versicolor were misclassified as Iris-virginica. The rightmost column gives the number of instances from each class (there are 50 irises of each of the three classes) and the bottom row gives the number of instances classified into each class (e.g., 48 instances were classified into virginica).

- In Show, we select what data we would like to see in the matrix.

  - Number of instances shows correctly and incorrectly classified instances numerically.

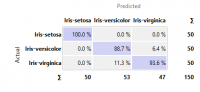

  - Proportions of predicted shows how many instances classified as, say, Iris-versicolor are in which true class; in the table we can read that 0% of them are actually setosae, 88.5% of those classified as versicolor are versicolors, and 7.7% are virginicae.

  - Proportions of actual shows the opposite relation: of all true versicolors, 92% were classified as versicolors and 8% as virginicae.

- In Select, you can choose the desired output.

  - Correct sends all correctly classified instances to the output by selecting the diagonal of the matrix.

  - Misclassified selects the misclassified instances.

  - None clears the selection. As mentioned before, one can also select individual cells of the table to select specific kinds of misclassified instances (e.g. the versicolors classified as virginicae).

- When sending selected instances, the widget can add new attributes, such as predicted classes or their probabilities, if the corresponding options Predictions and/or Probabilities are checked.

- The widget outputs every change if Send Automatically is ticked. If not, the user will need to click Send Selected to commit the changes.

- Produce a report.
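
The Show and Select options above come down to simple arithmetic on the count matrix. The sketch below assumes a hypothetical count matrix (not read from the screenshot), chosen so that the versicolor row gives the 92% / 8% split; the function names are invented for illustration.

```python
# Illustrative counts only (an assumption, not taken from the widget):
# rows are actual classes, columns are predicted classes.
classes = ["setosa", "versicolor", "virginica"]
counts = [
    [50, 0, 0],
    [0, 46, 4],
    [0, 6, 44],
]


def proportions_of_actual(counts):
    """Divide each cell by its row total (share of each true class)."""
    return [[c / sum(row) if sum(row) else 0.0 for c in row] for row in counts]


def proportions_of_predicted(counts):
    """Divide each cell by its column total (share of each predicted class)."""
    col_totals = [sum(col) for col in zip(*counts)]
    return [[c / t if t else 0.0 for c, t in zip(row, col_totals)]
            for row in counts]


def correct_and_misclassified(counts):
    """Sum the diagonal (Correct) and the off-diagonal cells (Misclassified)."""
    correct = sum(counts[i][i] for i in range(len(counts)))
    total = sum(sum(row) for row in counts)
    return correct, total - correct


by_actual = proportions_of_actual(counts)
by_predicted = proportions_of_predicted(counts)
correct, misclassified = correct_and_misclassified(counts)
```

Row normalisation answers "where did the true versicolors go?", while column normalisation answers "what are the instances predicted as versicolor really?". The two views generally differ because row and column totals differ.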

Example

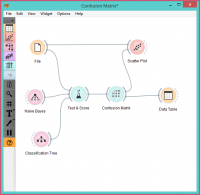

This workflow demonstrates the use of the Confusion Matrix widget.

Test & Score gets the data from File and two learning algorithms from Naive Bayes and Tree. It performs cross-validation or some other train-and-test procedure to obtain class predictions from both algorithms for all (or some) data instances. The test results are sent to Confusion Matrix, where we can observe how many instances were misclassified and in which way.
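
To make the cross-validation step concrete, here is a minimal standard-library sketch. It is not what Test & Score does internally: the data set is a hypothetical one-feature toy, the two learners (`majority_learner`, `nearest_mean_learner`) are simple stand-ins for Naive Bayes and Tree, and folds are assigned by index without shuffling.

```python
from collections import Counter

# Hypothetical one-feature data set: (feature value, class label).
data = [(1.0, "a"), (1.2, "a"), (0.9, "a"), (1.1, "a"),
        (3.0, "b"), (3.2, "b"), (2.9, "b"), (3.1, "b")]


def majority_learner(train):
    """Toy learner: always predicts the most common training class."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label


def nearest_mean_learner(train):
    """Toy learner: predicts the class whose mean feature value is closest."""
    sums = {}
    for x, y in train:
        total, n = sums.get(y, (0.0, 0))
        sums[y] = (total + x, n + 1)
    means = {y: total / n for y, (total, n) in sums.items()}
    return lambda x: min(means, key=lambda y: abs(x - means[y]))


def cross_val_predict(data, learner, k):
    """Predict every instance with a model trained on the other folds."""
    predictions = [None] * len(data)
    for fold in range(k):
        # Instance i belongs to fold i % k (simple, unshuffled assignment).
        train = [row for i, row in enumerate(data) if i % k != fold]
        model = learner(train)
        for i in range(fold, len(data), k):
            predictions[i] = model(data[i][0])
    return predictions


actual = [y for _, y in data]
learners = [("majority", majority_learner),
            ("nearest mean", nearest_mean_learner)]
results = {name: cross_val_predict(data, learner, k=4)
           for name, learner in learners}

# One confusion matrix (as pair counts) per learner, as in the Learners box.
matrices = {name: Counter(zip(actual, preds))
            for name, preds in results.items()}
```

Each instance is predicted exactly once by a model that never saw it during training, which is why the resulting confusion matrix covers the whole data set.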

In the output, we use Data Table to show the instances we selected in the confusion matrix. If we, for instance, click Misclassified, the table will contain all instances that were misclassified by the selected method.

Scatter Plot gets two sets of data. From the File widget it gets the complete data, while the confusion matrix sends only the selected data, for instance the misclassified instances. The scatter plot shows all the data, with bold symbols representing the selected data.