Difference between revisions of "Keras: Activation Function"

Onnowpurbo (talk | contribs)
Revision as of 05:35, 12 March 2020

Source: https://towardsdatascience.com/activation-functions-in-neural-networks-58115cda9c96

What are activation functions?

Activation functions, also known as transfer functions, map a node's inputs to its output in a defined way.

They are used to introduce non-linearity into the network.

Many activation functions are used in machine learning; the most common ones are listed below:

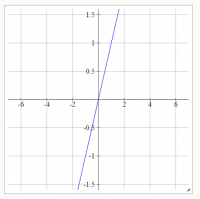

Identity or linear activation function

→ F(x) = x

→ You will get the exact same curve.

→ Input maps to same output.

Binary Step

→ Outputs one of two values depending on whether the input crosses a threshold, which makes it useful in binary classifiers.
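The identity and binary step functions described so far can be sketched in a few lines of plain Python. The function names, the threshold of 0, and the choice to return 1 exactly at the threshold are assumptions made for illustration; other conventions exist.

```python
def identity(x):
    """Linear activation: the output equals the input, F(x) = x."""
    return x


def binary_step(x, threshold=0.0):
    """Return 1 when x reaches the (assumed) threshold, otherwise 0."""
    return 1 if x >= threshold else 0


# Print each input next to both activations.
for x in (-2.0, 0.0, 3.5):
    print(x, identity(x), binary_step(x))
```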

Logistic or Sigmoid

→ Maps any real-valued input to an output in the range (0, 1).

→ Useful in neural networks.
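As a minimal sketch, the logistic function 1 / (1 + e^(-x)) can be written with the standard `math` module. The two-branch form below is one common way to avoid overflow for large negative inputs; the name `sigmoid` is chosen here for illustration.

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + e^(-x)), arranged so exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z lies in (0, 1)
    return z / (1.0 + z)


# Large negative inputs approach 0, large positive inputs approach 1.
for x in (-5.0, 0.0, 5.0):
    print(x, sigmoid(x))
```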

Tanh

→ Maps any real-valued input to an output in the range (-1, 1).

→ Similar to the sigmoid function, except that its output lies in (-1, 1) whereas the sigmoid's output lies in (0, 1).
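The similarity to the sigmoid can be made concrete: tanh is a rescaled and shifted sigmoid, tanh(x) = 2 * sigmoid(2x) - 1. The sketch below checks that identity numerically against `math.tanh`; the helper names are illustrative.

```python
import math


def sigmoid(x):
    """Plain logistic function; adequate for the moderate inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x):
    """tanh expressed through the sigmoid: 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


# Compare the rescaled sigmoid with the library tanh.
for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(x, math.tanh(x), tanh_via_sigmoid(x))
```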

ArcTan

→ Maps any real-valued input to an output in the range (-pi/2, pi/2).

→ Similar to the sigmoid and tanh functions.
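A short sketch using `math.atan` shows the same S-shaped saturation, with outputs staying strictly inside (-pi/2, pi/2). The wrapper name `arctan_activation` is an assumption for illustration.

```python
import math


def arctan_activation(x):
    """Inverse tangent used as an activation; output lies in (-pi/2, pi/2)."""
    return math.atan(x)


# Even very large inputs stay inside the open interval.
for x in (-1e6, -1.0, 0.0, 1.0, 1e6):
    print(x, arctan_activation(x))
```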

Rectified Linear Unit (ReLu)

→ It removes the negative part of the function: negative inputs map to 0, and non-negative inputs pass through unchanged.
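In code, ReLu is usually written as max(0, x). The following is a minimal plain-Python sketch; the function name is illustrative.

```python
def relu(x):
    """Rectified linear unit: 0 for negative inputs, x otherwise."""
    return max(0.0, x)


# Negative inputs are clipped to 0; positive inputs are unchanged.
for x in (-3.0, 0.0, 2.5):
    print(x, relu(x))
```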

Leaky ReLu

→ The only difference between ReLu and Leaky ReLu is that Leaky ReLu does not completely zero out the negative part; it only reduces its magnitude.
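A minimal sketch of Leaky ReLu follows. The slope applied to negative inputs, here called `alpha` and set to 0.01, is an assumed illustrative value; the text above does not specify one.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for non-negative inputs, alpha * x for negative inputs."""
    return x if x >= 0 else alpha * x


# Negative inputs are shrunk rather than set to zero.
for x in (-10.0, -1.0, 0.0, 2.5):
    print(x, leaky_relu(x))
```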

Softmax

→ The softmax function assigns probabilities when there is more than one output: it turns the outputs into a probability distribution.

→ Useful for finding the most probable output relative to the other outputs.
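The softmax of a list of scores can be sketched as exponentiating each score and dividing by the sum. Subtracting the maximum score first is a common numerical-stability step that does not change the result. The example scores are made up for illustration.

```python
import math


def softmax(scores):
    """Turn a list of real scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])  # hypothetical output scores
print(probs)
print("most probable index:", probs.index(max(probs)))
```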

Desirable properties of activation functions

1) Non-linearity

The purpose of the activation function is to introduce non-linearity into the network, which in turn allows you to model a response variable (aka target variable, class label, or score) that varies non-linearly with its explanatory variables.

Non-linear means that the output cannot be reproduced from a linear combination of the inputs.

Another way to think of it: without a non-linear activation function, a neural network, no matter how many layers it had, would behave just like a single-layer perceptron, because composing linear layers gives you just another linear function (see the definition just above).
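The collapse of stacked linear layers can be checked with a small scalar example. The weights and biases below are arbitrary values chosen for illustration: two layers w1 = 2, b1 = 1 and w2 = -3, b2 = 0.5 compose to a single layer with w = w2 * w1 = -6 and b = w2 * b1 + b2 = -2.5.

```python
def linear_layer(x, w, b):
    """A one-input linear layer with no activation: w * x + b."""
    return w * x + b


def two_linear_layers(x):
    """Two stacked linear layers with (arbitrary) illustrative weights."""
    hidden = linear_layer(x, 2.0, 1.0)
    return linear_layer(hidden, -3.0, 0.5)


def collapsed_layer(x):
    """Single equivalent layer: w = -3 * 2 = -6, b = -3 * 1 + 0.5 = -2.5."""
    return linear_layer(x, -6.0, -2.5)


# Both columns agree for every input.
for x in (-2.0, 0.0, 1.5):
    print(x, two_linear_layers(x), collapsed_layer(x))
```

Inserting a non-linear function between the two layers breaks this equivalence, which is why the activation is needed.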

2) Continuously differentiable

This property is necessary for enabling gradient-based optimization methods.

The binary step activation function, for example, is not differentiable at 0, and its derivative is 0 everywhere else, so gradient-based methods can make no progress with it.
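A rough numerical check illustrates the point. The sketch below estimates derivatives with a central difference (a standard approximation, with an assumed step size `h`) and contrasts the binary step with the sigmoid; all names are illustrative.

```python
import math


def binary_step(x):
    """Step at 0: returns 1.0 for x >= 0, otherwise 0.0."""
    return 1.0 if x >= 0 else 0.0


def sigmoid(x):
    """Plain logistic function; adequate for the moderate inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def numerical_derivative(f, x, h=1e-6):
    """Central-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Away from 0 the step has zero slope, so it gives no gradient signal,
# while the sigmoid has a small but non-zero slope at the same points.
for x in (-1.5, 2.0):
    print(x, numerical_derivative(binary_step, x), numerical_derivative(sigmoid, x))
```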

3) Range

When the range of the activation function is finite, gradient-based training methods tend to be more stable, because pattern presentations significantly affect only limited weights.

When the range is infinite, training is generally more efficient because pattern presentations significantly affect most of the weights. In the latter case, smaller learning rates are typically necessary.

4) Monotonic

When the activation function is monotonic, the error surface associated with a single-layer model is guaranteed to be convex.

5) Approximates identity near the origin

When activation functions have this property, the neural network will learn efficiently when its weights are initialized with small random values.

When the activation function does not approximate identity near the origin, special care must be used when initializing the weights.
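To see what "approximates identity near the origin" means in practice, the sketch below compares f(x) with x for a small input. tanh stays very close to x, while the sigmoid does not, because sigmoid(0) is 0.5. The helper `identity_gap` is a hypothetical name introduced for this example.

```python
import math


def sigmoid(x):
    """Plain logistic function; adequate for the small inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def identity_gap(f, x):
    """Absolute difference between f(x) and x."""
    return abs(f(x) - x)


small = 0.01
print("tanh gap:   ", identity_gap(math.tanh, small))
print("sigmoid gap:", identity_gap(sigmoid, small))
```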

Which activation function should you use for your model?

→ When choosing an activation function for your model, try out different functions and pick the one that works best for your model.

For a more comprehensive list of activation functions, please visit link
