Difference between revisions of "Orange: Calibrated Learner"

Onnowpurbo (talk | contribs) (Created page with "Sumber: https://docs.biolab.si//3/visual-programming/widgets/model/calibratedlearner.html Wraps another learner with probability calibration and decision threshold optimizati...")

Onnowpurbo (talk | contribs) |

||

| Line 6: | Line 6: | ||

Data: input dataset | Data: input dataset | ||

| − | |||

Preprocessor: preprocessing method(s) | Preprocessor: preprocessing method(s) | ||

| − | |||

Base Learner: learner to calibrate | Base Learner: learner to calibrate | ||

| Line 14: | Line 12: | ||

Learner: calibrated learning algorithm | Learner: calibrated learning algorithm | ||

| − | |||

Model: trained model using the calibrated learner | Model: trained model using the calibrated learner | ||

This learner produces a model that calibrates the distribution of class probabilities and optimizes decision threshold. The widget works only for binary classification tasks. | This learner produces a model that calibrates the distribution of class probabilities and optimizes decision threshold. The widget works only for binary classification tasks. | ||

| − | + | [[File:Calibrated-Learner-stamped.png|center|200px|thumb]] | |

| + | |||

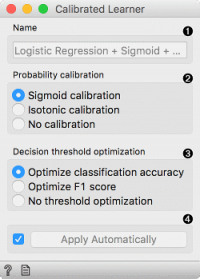

The name under which it will appear in other widgets. Default name is composed of the learner, calibration and optimization parameters. | The name under which it will appear in other widgets. Default name is composed of the learner, calibration and optimization parameters. | ||

Probability calibration: | Probability calibration: | ||

| − | |||

Sigmoid calibration | Sigmoid calibration | ||

| − | |||

Isotonic calibration | Isotonic calibration | ||

| − | |||

No calibration | No calibration | ||

Decision threshold optimization: | Decision threshold optimization: | ||

| − | |||

Optimize classification accuracy | Optimize classification accuracy | ||

| − | |||

Optimize F1 score | Optimize F1 score | ||

| − | |||

No threshold optimization | No threshold optimization | ||

Press Apply to commit changes. If Apply Automatically is ticked, changes are committed automatically. | Press Apply to commit changes. If Apply Automatically is ticked, changes are committed automatically. | ||

| − | + | ==Contoh== | |

A simple example with Calibrated Learner. We are using the titanic data set as the widget requires binary class values (in this case they are ‘survived’ and ‘not survived’). | A simple example with Calibrated Learner. We are using the titanic data set as the widget requires binary class values (in this case they are ‘survived’ and ‘not survived’). | ||

| Line 49: | Line 41: | ||

Comparing the results with the uncalibrated Logistic Regression model we see that the calibrated model performs better. | Comparing the results with the uncalibrated Logistic Regression model we see that the calibrated model performs better. | ||

| − | + | [[File:Calibrated-Learner-Example.png|center|200px|thumb]] | |

Revision as of 08:48, 23 January 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/model/calibratedlearner.html

Wraps another learner with probability calibration and decision threshold optimization.

Inputs

Data: input dataset

Preprocessor: preprocessing method(s)

Base Learner: learner to calibrate

Outputs

Learner: calibrated learning algorithm

Model: trained model using the calibrated learner

This learner produces a model that calibrates the distribution of class probabilities and optimizes the decision threshold. The widget works only for binary classification tasks.

The name under which the learner will appear in other widgets. The default name is composed of the learner, calibration, and optimization parameters.

Probability calibration:

Sigmoid calibration

Isotonic calibration

No calibration
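The two calibration options above can be illustrated with a minimal pure-Python sketch. This is not Orange's implementation: the function names (`fit_sigmoid`, `apply_sigmoid`, `isotonic_fit`), the gradient-descent settings, and the toy scores are assumptions made for illustration. Sigmoid calibration fits a logistic curve to the base learner's scores, while isotonic calibration fits a non-decreasing step function using the pool-adjacent-violators procedure.

```python
import math


def fit_sigmoid(scores, labels, lr=0.1, epochs=2000):
    """Fit p = 1 / (1 + exp(-(a*s + b))) by gradient descent on log loss.

    Hypothetical helper; lr and epochs are illustrative defaults.
    """
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(-(a * s + b)))
            grad_a += (p - y) * s  # d(log loss)/da for one sample
            grad_b += (p - y)      # d(log loss)/db for one sample
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def apply_sigmoid(score, a, b):
    """Map a raw score to a calibrated probability."""
    return 1.0 / (1.0 + math.exp(-(a * score + b)))


def isotonic_fit(scores, labels):
    """Pool-adjacent-violators: non-decreasing fit of labels over sorted scores."""
    pairs = sorted(zip(scores, labels))
    blocks = []  # each block holds [sum of labels, number of samples]
    for _, y in pairs:
        blocks.append([float(y), 1])
        # Merge backwards while the previous block's mean exceeds the last one's.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    fitted = []
    for total, count in blocks:
        fitted.extend([total / count] * count)
    return [s for s, _ in pairs], fitted


# Toy data (made up): uncalibrated scores and true binary labels.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 0, 1, 1]

a, b = fit_sigmoid(scores, labels)
sorted_scores, iso_probs = isotonic_fit(scores, labels)
```

On this toy data the isotonic fit groups neighbouring samples whose labels are out of order into blocks that share one averaged probability, whereas the sigmoid fit always yields a smooth, strictly increasing curve.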

Decision threshold optimization:

Optimize classification accuracy

Optimize F1 score

No threshold optimization
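The threshold options listed above can be sketched as a simple search over candidate cut-offs. This is an illustrative sketch, not the widget's actual code: the helper names (`accuracy`, `f1_score`, `best_threshold`), the choice of candidate thresholds (each distinct predicted probability), and the toy data are assumptions.

```python
def accuracy(labels, preds):
    """Fraction of predictions that match the true labels."""
    return sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)


def f1_score(labels, preds):
    """F1 = 2*TP / (2*TP + FP + FN); returns 0.0 when undefined."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(probs, labels, metric):
    """Return (threshold, score) maximizing `metric`.

    Starts from the usual 0.5 cut-off and only moves to a candidate
    threshold if it gives a strictly better score.
    """
    best_t = 0.5
    best_v = metric(labels, [int(p >= best_t) for p in probs])
    for t in sorted(set(probs)):
        preds = [int(p >= t) for p in probs]  # positive if prob >= threshold
        value = metric(labels, preds)
        if value > best_v:
            best_t, best_v = t, value
    return best_t, best_v


# Toy data (made up): predicted probabilities of the positive class.
probs = [0.1, 0.3, 0.35, 0.4, 0.45, 0.6, 0.8]
labels = [0, 0, 1, 1, 1, 0, 1]

t_ca, ca = best_threshold(probs, labels, accuracy)
t_f1, f1 = best_threshold(probs, labels, f1_score)
```

With "No threshold optimization", the equivalent behaviour in this sketch is simply to keep the 0.5 cut-off.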

Press Apply to commit changes. If Apply Automatically is ticked, changes are committed automatically.

Example

A simple example with Calibrated Learner. We are using the titanic data set because the widget requires binary class values (in this case, ‘survived’ and ‘not survived’).

We will use Logistic Regression as the base learner, which we will calibrate with the default settings: sigmoid calibration of the distribution values and threshold optimization for classification accuracy (CA).

Comparing the results with the uncalibrated Logistic Regression model, we see that the calibrated model performs better.