Orange: Text Preprocessing

Latest revision as of 09:15, 12 April 2020

Source: https://orange3-text.readthedocs.io/en/latest/widgets/preprocesstext.html

The Preprocess Text widget preprocesses a corpus with the methods we select.

Input

Corpus: A collection of documents.

Output

Corpus: Preprocessed corpus.

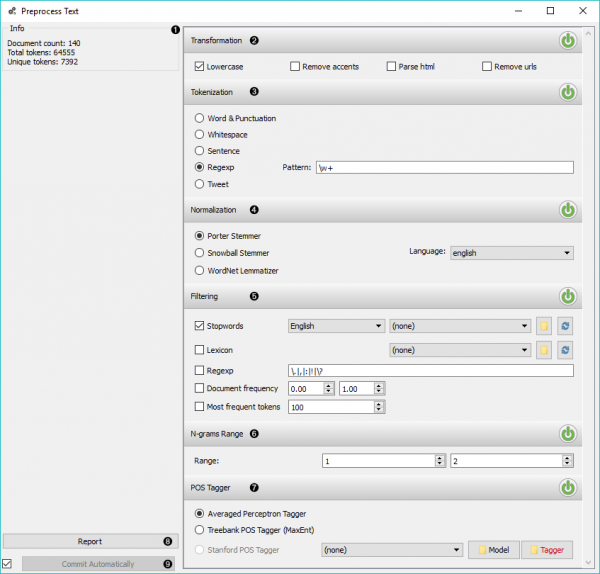

The Preprocess Text widget splits text into smaller units (tokens), filters them, runs normalization (stemming, lemmatization), creates n-grams, and tags tokens with part-of-speech labels. The steps of the analysis are applied sequentially and can be turned on or off.

- Information on the preprocessed data. Document count reports the number of documents on the input. Total token count counts all tokens in the corpus. Unique tokens excludes duplicate tokens and reports only the unique tokens in the corpus.

- Transformation transforms input data. It applies lowercase transformation by default.

  - Lowercase will turn all text to lowercase.

  - Remove accents will remove all diacritics/accents in text. naïve → naive

  - Parse html will detect html tags and parse out text only. <a href…>Some text</a> → Some text

  - Remove urls will remove urls from text. This is a http://orange.biolab.si/ url. → This is a url.

- Tokenization is the method of breaking the text into smaller components (words, sentences, bigrams).

  - Word & Punctuation will split the text by words and keep punctuation symbols. This example. → (This), (example), (.)

  - Whitespace will split the text by whitespace only. This example. → (This), (example.)

  - Sentence will split the text by full stop, retaining only full sentences. This example. Another example. → (This example.), (Another example.)

  - Regexp will split the text by the provided regex. By default it splits by words only (omits punctuation).

  - Tweet will split the text with a pre-trained Twitter model, which keeps hashtags, emoticons and other special symbols. This example. :-) #simple → (This), (example), (.), (:-)), (#simple)

- Normalization applies stemming and lemmatization to words. (I’ve always loved cats. → I have alway love cat.) For languages other than English, use Snowball Stemmer (it offers the languages available in its NLTK implementation).

  - Porter Stemmer applies the original Porter stemmer.

  - Snowball Stemmer applies an improved version of the Porter stemmer (Porter2). Set the language for normalization; the default is English.

  - WordNet Lemmatizer applies a network of cognitive synonyms to tokens, based on a large lexical database of English.

- Filtering removes or keeps a selection of words.

  - Stopwords removes stopwords from text (e.g. removes ‘and’, ‘or’, ‘in’…). Select the language to filter by; English is set as default. You can also load your own list of stopwords provided in a simple *.txt file with one stopword per line. Click the ‘browse’ icon to select the file containing stopwords. If the file was properly loaded, its name will be displayed next to the pre-loaded stopwords. Change ‘English’ to ‘None’ if you wish to filter out only the provided stopwords. Click the ‘reload’ icon to reload the list of stopwords.

  - Lexicon keeps only words provided in the file. Load a *.txt file with one word per line to use as lexicon. Click the ‘reload’ icon to reload the lexicon.

  - Regexp removes words that match the regular expression. The default is set to remove punctuation.

  - Document frequency keeps tokens that appear in no fewer and no more than the specified number / percentage of documents. If you provide integers as parameters, it keeps only tokens that appear in the specified number of documents. E.g. DF = (3, 5) keeps only tokens that appear in 3 or more and 5 or fewer documents. If you provide floats as parameters, it keeps only tokens that appear in the specified percentage of documents. E.g. DF = (0.3, 0.5) keeps only tokens that appear in 30% to 50% of documents. The default returns all tokens.

  - Most frequent tokens keeps only the specified number of most frequent tokens. The default is the 100 most frequent tokens.

- N-grams Range creates n-grams from tokens. The numbers specify the range of n-grams. The default returns one-grams and two-grams.

- POS Tagger runs part-of-speech tagging on tokens.

  - Averaged Perceptron Tagger runs POS tagging with Matthew Honnibal’s averaged perceptron tagger.

  - Treebank POS Tagger (MaxEnt) runs POS tagging with a trained Penn Treebank model.

  - Stanford POS Tagger runs a log-linear part-of-speech tagger designed by Toutanova et al. Please download it from the provided website and load it in Orange. You have to load the language-specific model in Model and load stanford-postagger.jar in the Tagger section.

- Produce a report.

- If Commit Automatically is on, changes are communicated automatically. Alternatively press Commit.
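
The transformation and tokenization options listed above can be approximated in a few lines of plain Python. This is only a minimal sketch using the standard library, not the code the widget runs; all function names and the URL pattern are assumptions made for illustration.

```python
import re
import unicodedata
from html.parser import HTMLParser


class _TextOnly(HTMLParser):
    """Collects the text nodes of an HTML fragment and drops the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def remove_accents(text):
    # Decompose characters (NFKD), then drop the combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def parse_html(text):
    parser = _TextOnly()
    parser.feed(text)
    parser.close()
    return "".join(parser.parts)


def remove_urls(text):
    # Assumed pattern: http:// or https:// up to the next whitespace,
    # together with the whitespace that follows the URL.
    return re.sub(r"https?://\S+\s*", "", text)


def tokenize_whitespace(text):
    return text.split()


def tokenize_word_punct(text):
    # Runs of word characters, or runs of non-word, non-space characters.
    return re.findall(r"\w+|[^\w\s]+", text)


def tokenize_words_only(text):
    # Comparable to a words-only regexp tokenizer: punctuation is omitted.
    return re.findall(r"\w+", text)


print(remove_accents("naïve"))
print(parse_html('<a href="#">Some text</a>'))
print(remove_urls("This is a http://orange.biolab.si/ url."))
print(tokenize_word_punct("This example."))
print(tokenize_whitespace("This example."))
```

The sketch reproduces the small examples given in the list (naïve → naive, and so on), but real tokenizers handle many more edge cases than these regular expressions do.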

Note! Preprocess Text applies the preprocessing steps in the order in which they are listed. This means it first transforms the text, then applies tokenization, POS tags, normalization, filtering, and finally builds n-grams from the given tokens. This is especially important for the WordNet Lemmatizer, since it requires POS tags for proper normalization.

Useful regular expressions

Here are some regular expressions that are useful for quick filtering:
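
Because filtering runs before n-gram construction, n-grams are built only from the tokens that survive the filter. The following sketch illustrates that order with a document-frequency filter followed by an n-gram step. It is written from the description of the options, not taken from the widget's code, and the function names and sample documents are hypothetical.

```python
from collections import Counter


def filter_by_document_frequency(docs, min_df, max_df):
    """Keep tokens whose document frequency lies in [min_df, max_df].

    Integers are read as absolute document counts, floats as fractions
    of the corpus, following the description of the widget option.
    """
    n_docs = len(docs)
    # Count each token once per document.
    df = Counter(token for doc in docs for token in set(doc))

    def to_count(bound):
        return bound * n_docs if isinstance(bound, float) else bound

    low, high = to_count(min_df), to_count(max_df)
    keep = {tok for tok, count in df.items() if low <= count <= high}
    return [[tok for tok in doc if tok in keep] for doc in docs]


def ngrams(tokens, n_min=1, n_max=2):
    """Return all n-grams with n_min <= n <= n_max, joined by a space."""
    result = []
    for n in range(n_min, n_max + 1):
        for i in range(len(tokens) - n + 1):
            result.append(" ".join(tokens[i:i + n]))
    return result


docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "sat"],
    ["the", "cat", "ran"],
]

# Filtering first: only tokens found in exactly 2 documents remain.
filtered = filter_by_document_frequency(docs, 2, 2)
# N-grams second: built from the filtered tokens only.
print([ngrams(doc) for doc in filtered])
```

In this toy corpus "the" appears in all three documents and is dropped, so the bigram "cat sat" is formed in the first document even though "the cat" was adjacent in the raw text.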

\bword\b: matches exact word

\w+: matches only words, no punctuation

\b(B|b)\w+\b: matches words beginning with the letter b

\w{4,}: matches words that are at least 4 characters long

\b\w+(Y|y)\b: matches words ending with the letter y
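
These patterns can be tried out with Python's `re` module before entering them in the widget. The sample sentence below is made up for illustration. One assumption to note: the sketch writes `[Bb]` and `[Yy]` instead of `(B|b)` and `(Y|y)`, because `re.findall` returns only the captured group when a pattern contains one; the character class matches the same words.

```python
import re

text = "Bob barely knows why Betty buys a word or words daily."

exact = re.findall(r"\bword\b", text)            # the exact word only
words = re.findall(r"\w+", text)                 # words, no punctuation
starts_with_b = re.findall(r"\b[Bb]\w+\b", text)  # words beginning with b
four_or_more = re.findall(r"\w{4,}", text)       # words of 4+ characters
ends_with_y = re.findall(r"\b\w+[Yy]\b", text)   # words ending with y

print(exact)
print(starts_with_b)
print(four_or_more)
print(ends_with_y)
```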

Examples

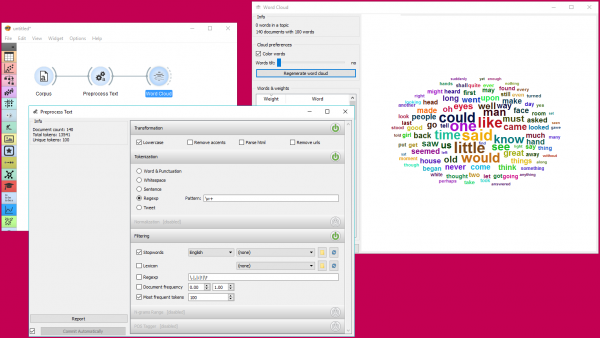

In this example, we observe the effect of preprocessing on text. We work with book-excerpts.tab, which we load with the Corpus widget. We connect the Preprocess Text widget to the Corpus widget and keep the default preprocessing methods (lowercase, per-word tokenization, and stopword removal). The only additional parameter we set is to output only the 100 most frequent tokens. We then connect Preprocess Text to the Word Cloud widget to observe the words that occur most frequently in the text. Play around with different parameters to see how they change the output.
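
The same sequence of steps (lowercase, word tokenization, stopword removal, keeping the most frequent tokens) can be sketched outside Orange in plain Python. This is an illustration under stated assumptions, not the widget's implementation: the stopword list is a tiny hypothetical one, the two sample sentences stand in for the corpus, and `preprocess` is a made-up helper name.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "and", "or", "in", "of", "to", "was"}


def preprocess(documents, top_n=100):
    """Lowercase, tokenize by words, drop stopwords, keep top_n tokens."""
    tokenized = []
    for doc in documents:
        tokens = re.findall(r"\w+", doc.lower())
        tokenized.append([t for t in tokens if t not in STOPWORDS])

    # Corpus-wide token counts decide which tokens are "most frequent".
    counts = Counter(t for doc in tokenized for t in doc)
    most_frequent = {tok for tok, _ in counts.most_common(top_n)}
    kept = [[t for t in doc if t in most_frequent] for doc in tokenized]
    return kept, counts


docs = [
    "The wolf was in the forest.",
    "The forest was dark and the wolf was hungry.",
]
tokens, counts = preprocess(docs, top_n=2)
print(tokens)
print(counts.most_common(2))
```

The token counts returned here are roughly the information a word cloud visualizes: more frequent tokens are drawn larger.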

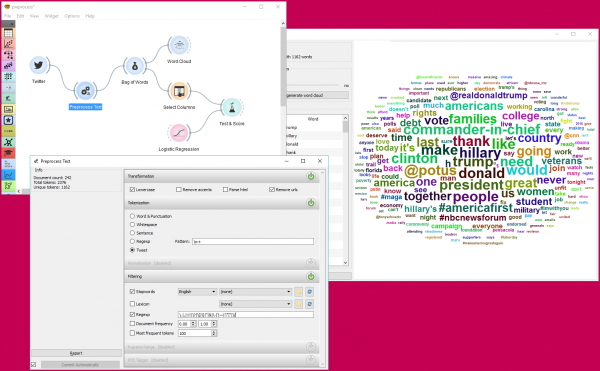

The next example is slightly more complex. We retrieved the data with the Twitter widget. We queried the internet for tweets from the users @HillaryClinton and @realDonaldTrump and got their tweets from a two-week period, 242 in total.

The Preprocess Text widget offers Tweet tokenization, which retains hashtags, emojis, mentions and so on. However, this tokenizer does not remove punctuation, so we extended the Regexp filtering with the symbols we wanted to get rid of. We end up with word-only tokens, which we display in the Word Cloud widget. We can then build a schema for predicting the author of a tweet from its content, which is explained in more detail in the documentation for the Twitter widget (Orange: Twitter).